Platform

Overview

xVector is a collaborative platform for building data applications. It is powered by MetaGraph, an intelligence engine that keeps track of all resources powering data applications. Businesses can connect, explore, experiment with algorithms, and drive outcomes rapidly. A single pane of glass enables data engineers, data scientists, business analysts, and users to extract value from data collaboratively.

Data Applications comprise all resources and related actions in creating value from data. The actions performed in a Data Application include connecting to various data sources, profiling the datasets for quality issues and anomalies, enriching data for further analysis, exploring the datasets to derive insights, mining patterns with advanced analytics and models, communicating the outputs to drive outcomes, and observing the applications for further enhancements and improvements.

Concepts

The following sections describe each of the resources that constitute a data application. Each resource performs a specific function, enabling efficient division of labor and collaboration.

Workspace - Workspace provides a convenient way to visualize and organize the interactions across resources such as data sources, datasets, reports, and models that power a DataApp. Business analysts, data scientists, and data engineers have a single pane of glass to collaborate and version their work.

Read more: Workspace

Datasource - Enterprise data is available in files, databases, object stores, and cloud warehouses, or is accessible via APIs in various applications. Datasource allows users to bring data from multiple sources for further processing. Data sources are kept in sync on a scheduled or on-demand basis. In addition to first-party data, enterprises now have access to a large amount of third-party data to enhance their analysis.

Read more: Datasource.

Dataset - Once the data is available, users can transform and refine it using an enrichment process. Enrichment comprises functions to profile, detect anomalies, join other datasets, run a regression, classify or segment based on clustering algorithms, or manually edit numerical, text, or image data. Users can perform all the actions needed to train models and apply them.

The delineation between a data source and a dataset allows for data traceability.

Read more: Dataset.

Model - Models enable the application of an appropriate analytical lens to the data. Supervised AI models, such as regression and classification, or unsupervised models, such as clustering, allow users to tease out patterns in tabular, image, or textual data. Time series models allow for forecasting. Users can extract entities, identify relevant topics, or understand sentiment in textual data.

Read more: Models

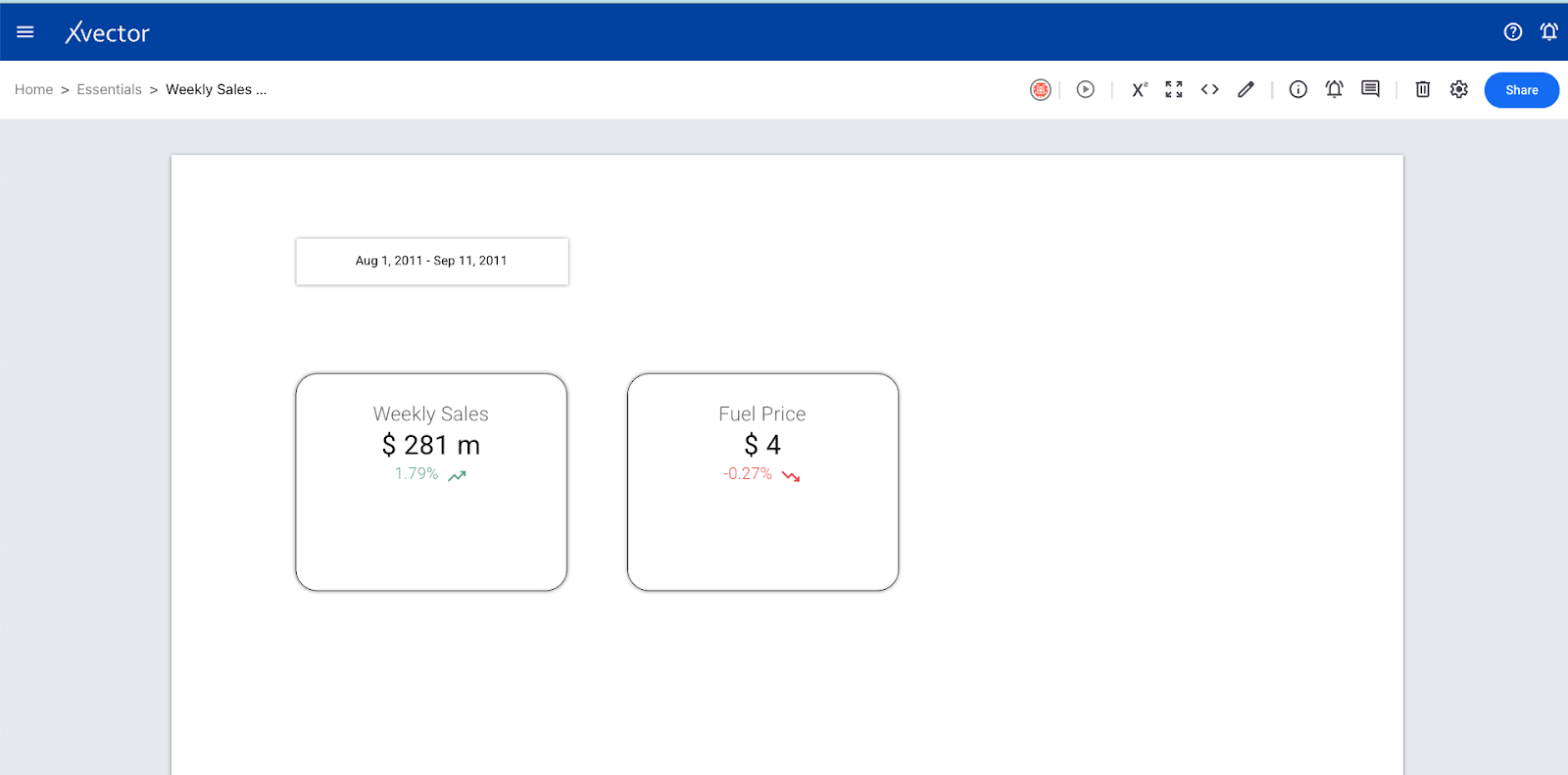

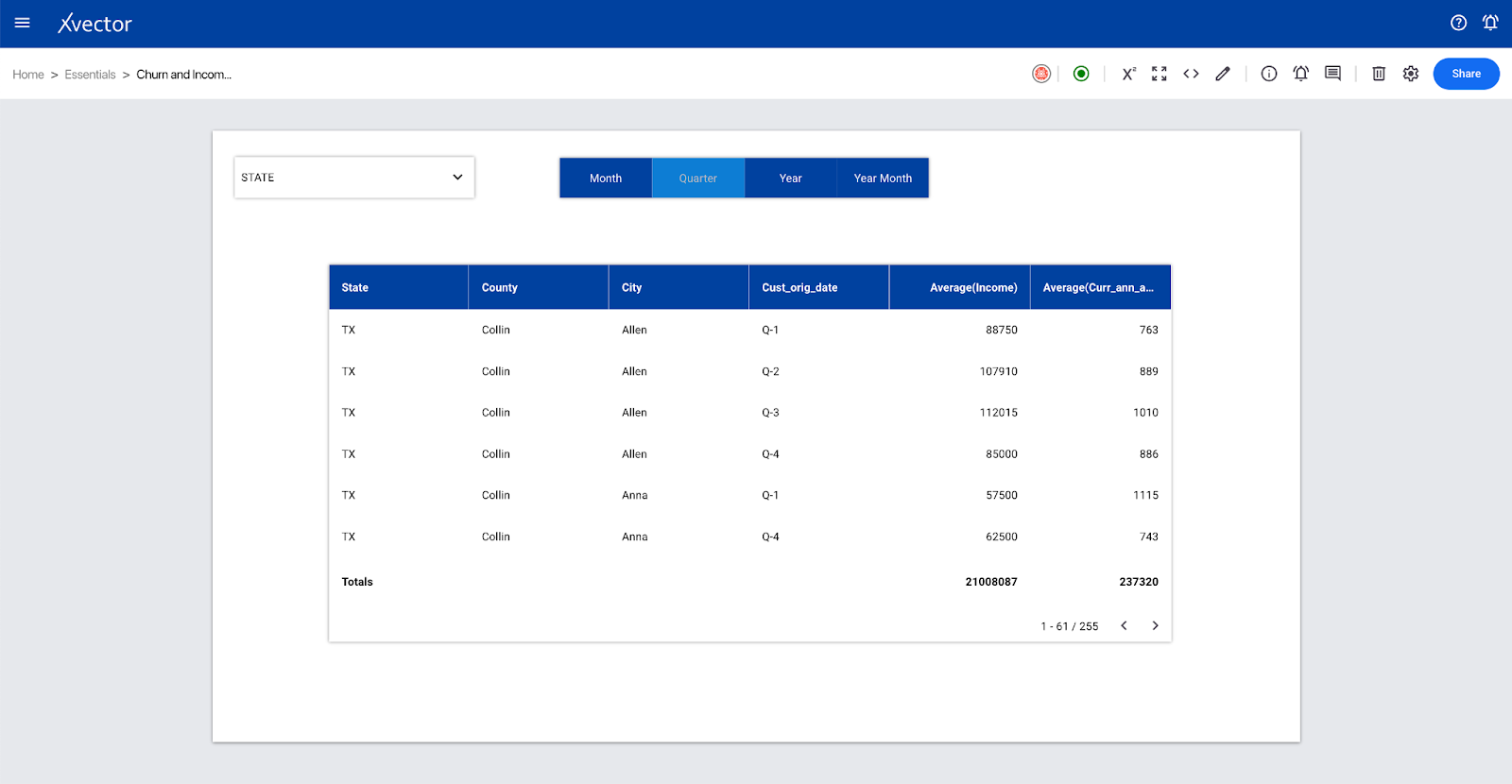

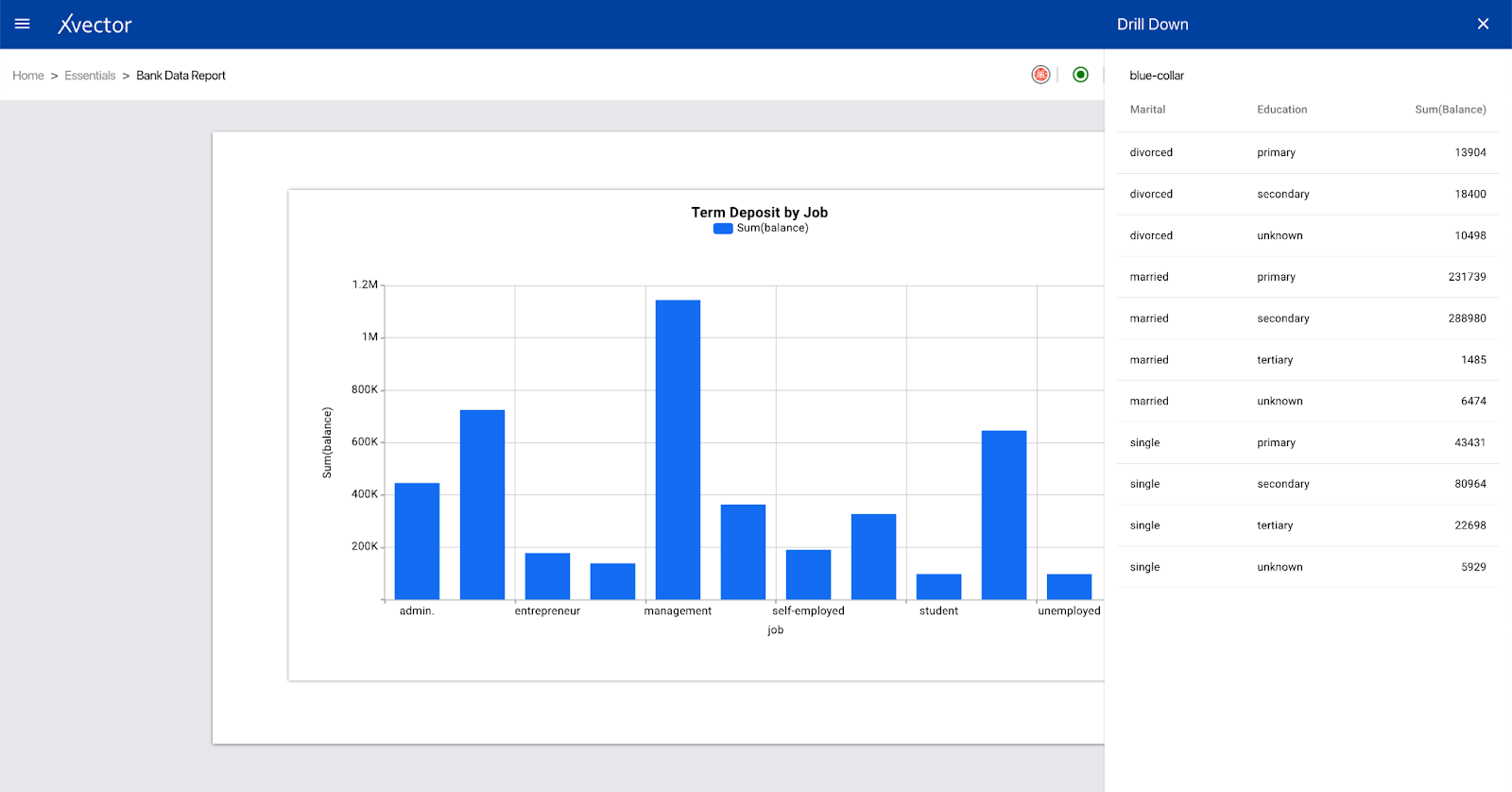

Reports - Reports provide a canvas for visualizing and exploring data. Users can build interactive dashboards, interrogate models, slice and dice the data, and drill down into details, allowing businesses to collaborate and refine their understanding of the underlying data.

Read more: Reports

Data Destination - Users can act on insights by automatically writing the output to execution systems. For example, if they identify customers who are likely to churn, they can send this data to a CRM system to offer those customers discounts or take other remedial actions.

Read more: Data Destination.

Prototyping and Operationalizing Data Apps

The xVector platform allows rapid prototyping using a draft server in the design phase. Business users and analysts can collaborate to define the data application and hand it off to data scientists and engineers, who refine and tune it for performance during the operational phase.

Users can collaborate on each resource, such as reports, datasets, and models. Depending on their permissions, users can edit, view, or comment on a resource. Only an email address is needed to start collaborating.

User groups allow for organizing and easier sharing across users.

Users can control the visibility/scope of a given resource. Making the resources public makes them visible to all users across the cluster. Each resource has a defined URL, enabling ease of sharing.

Users need to start the draft driver (also referred to as the draft server) in the workspace before using it. The icon to start the draft server is at the top right of the workspace, along with other admin icons. Once the draft driver is running, users can join the session and use it. While in session, a driver dedicated to that workspace is provided, enabling users to do rapid data exploration and analysis without waiting for resources to be provisioned. This allows for rapid prototyping with business users. Resources created in the draft driver are marked as draft and exist only in memory. Once prototyping is done, the dataset can be operationalized and materialized. Upon materialization, draft resources are persisted and become available as regular resources.

Version Management

DataApps are managed with the same rigor as other software applications. Versioning ensures their stability and manageability. Once a resource such as a workspace, dataset, model, or report is assigned a version, consuming applications can be guaranteed a consistent interface.

Users can experiment freely in draft mode. Once they are satisfied with the output, they can publish the findings or resources with a version. Any further changes result in a newer version.

Versioning allows for experimentation and stability while building data applications.
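The publish-and-pin behavior can be sketched as a resource holding a mutable draft plus an append-only list of frozen versions. The class and method names below are hypothetical and only illustrate the idea; they are not xVector's API.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class VersionedResource:
    """Hypothetical model of a resource with a mutable draft and frozen published versions."""

    name: str
    draft: dict = field(default_factory=dict)
    versions: list = field(default_factory=list)

    def edit_draft(self, **changes):
        # Draft mode: change freely; nothing already published is affected.
        self.draft.update(changes)

    def publish(self):
        # Freeze a deep copy of the draft as the next version (1, 2, 3, ...).
        self.versions.append(copy.deepcopy(self.draft))
        return len(self.versions)

    def get(self, version):
        # Consumers pin a version number and always get the same snapshot back.
        return copy.deepcopy(self.versions[version - 1])
```

Because each version is a deep copy, a consumer that pins version 1 keeps receiving the same interface even after later edits to the draft are published as version 2.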

Synchronization

All the resources used to build a DataApp need to be kept up to date. Therefore, each resource has an update_policy, which can be OnDemand, OnEvent(*), OnSchedule, or Rules.

These policies let users configure a flexible, efficient way to reflect data updates. Resources can use rules to model dependencies across different resources; for example, a user might want to update a dataset only after all of its upstream datasets are updated, even though each upstream dataset may have a different update frequency.

The synchronization process is triggered when a data source is updated. The system notifies all the dependent resources, which take the appropriate action based on the update_policy settings.
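The rule described above (refresh a dataset only after all of its upstream datasets have updated) can be sketched as a small dependency graph. This is a hypothetical illustration of the notification flow, not xVector's implementation: the `SyncGraph` class and its methods are invented for the example, only the policy names come from this page, and `OnSchedule` is assumed to be handled by an external scheduler.

```python
from collections import defaultdict


class SyncGraph:
    """Hypothetical dependency graph: an updated resource notifies its dependents."""

    def __init__(self):
        self.upstream = defaultdict(set)    # resource -> resources it depends on
        self.downstream = defaultdict(set)  # resource -> resources that depend on it
        self.policy = {}                    # resource -> update_policy name
        self.pending = defaultdict(set)     # resource -> upstreams updated since last refresh
        self.log = []                       # order in which resources were refreshed

    def add(self, name, policy, depends_on=()):
        self.policy[name] = policy
        for parent in depends_on:
            self.upstream[name].add(parent)
            self.downstream[parent].add(name)

    def updated(self, name):
        """Record that `name` changed and let each dependent act on its update_policy."""
        for child in sorted(self.downstream[name]):
            self.pending[child].add(name)
            policy = self.policy[child]
            if policy == "OnEvent":
                self.refresh(child)
            elif policy == "Rules" and self.pending[child] >= self.upstream[child]:
                # Rule: refresh only after every upstream resource has updated.
                self.refresh(child)
            # OnDemand and OnSchedule resources wait for a user or a scheduler.

    def refresh(self, name):
        self.pending[name].clear()
        self.log.append(name)
        self.updated(name)  # propagate the change further downstream
```

For example, a `Rules` dataset that depends on an orders dataset and a customers dataset is refreshed only once both have been refreshed, regardless of which source updates first.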

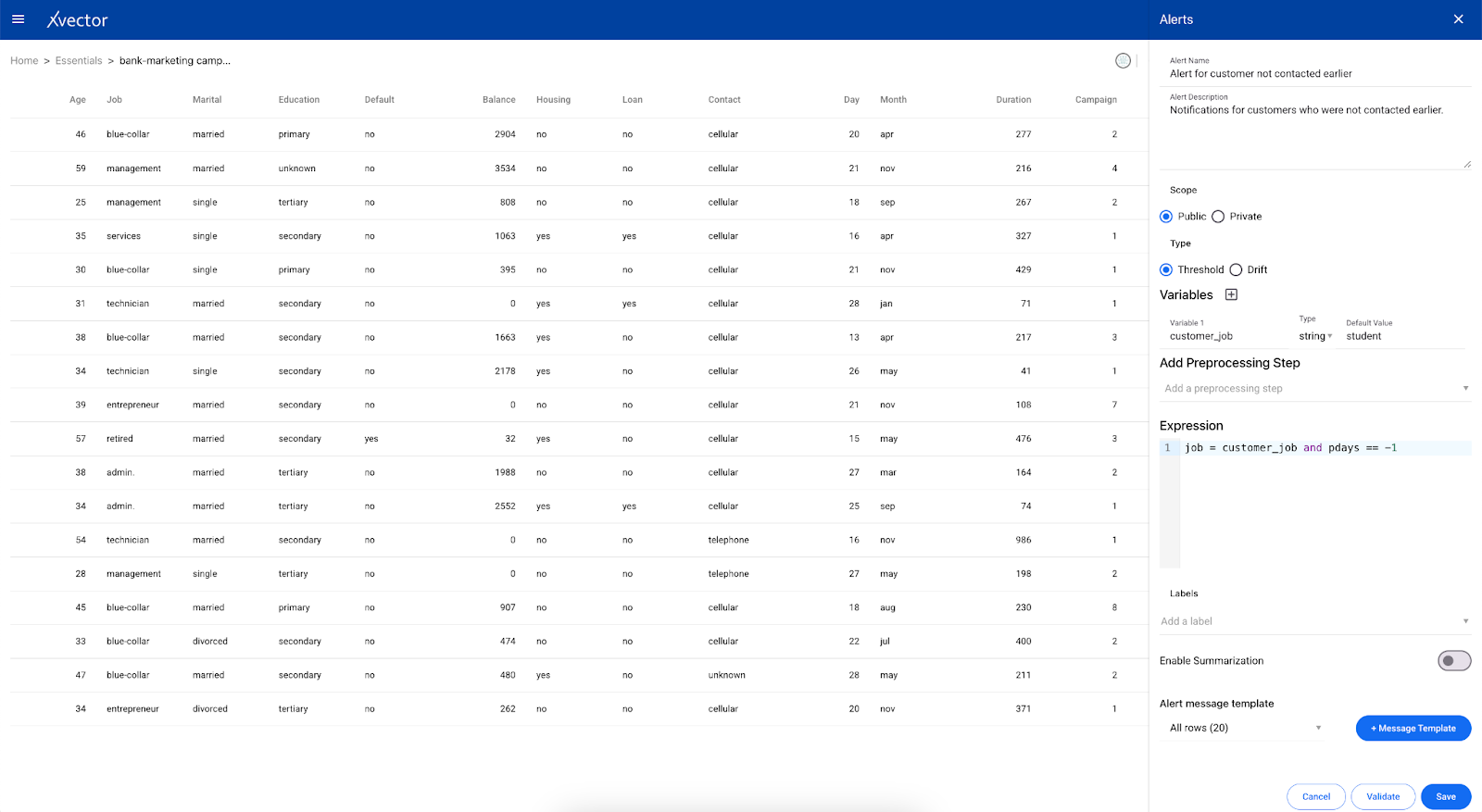

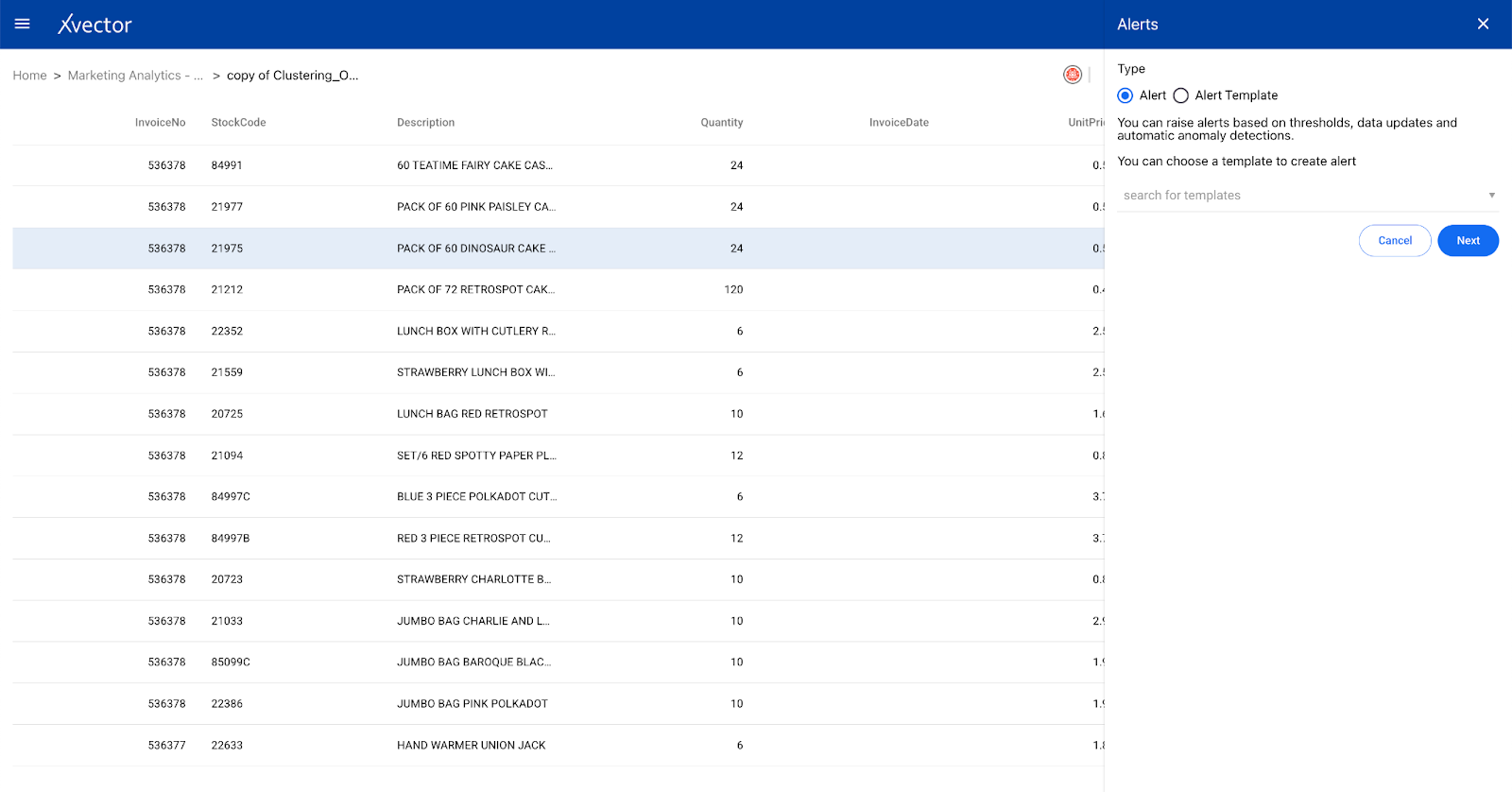

Observability

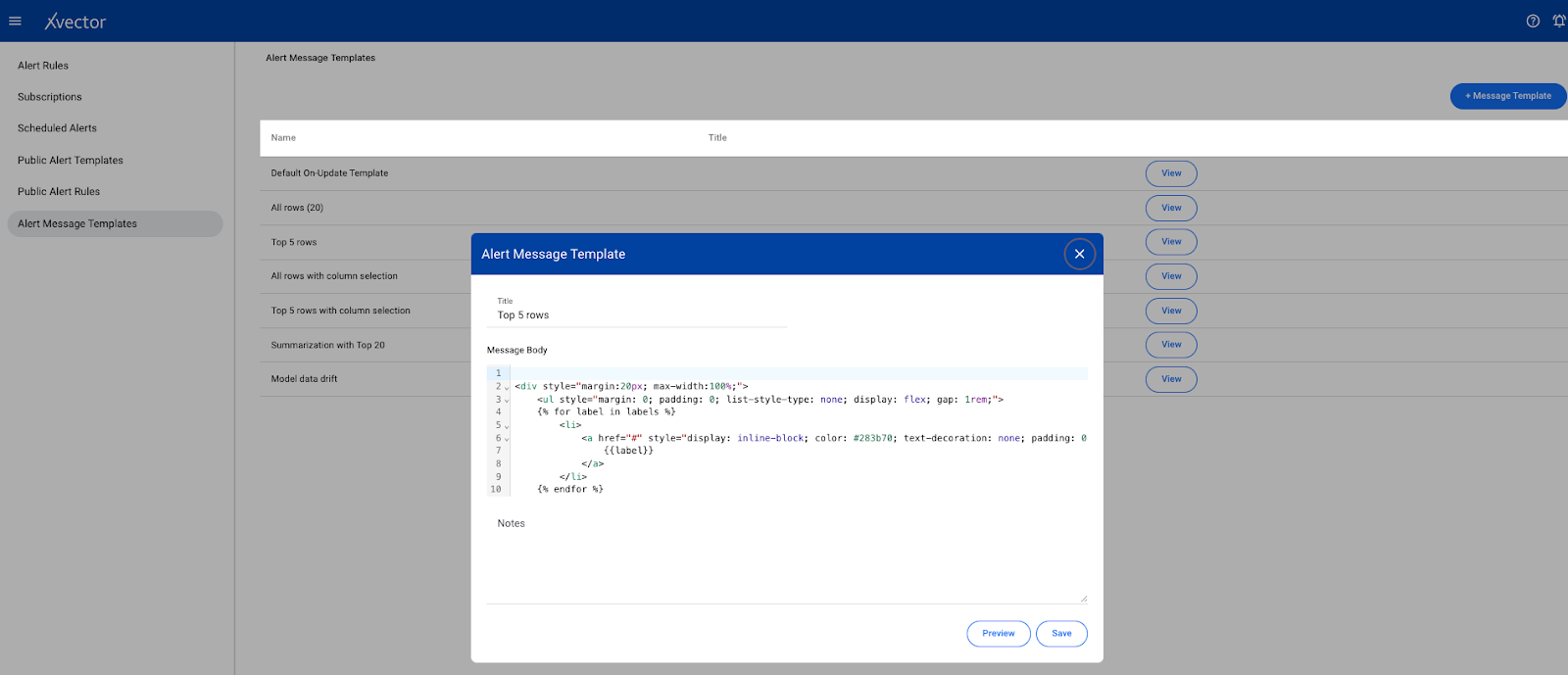

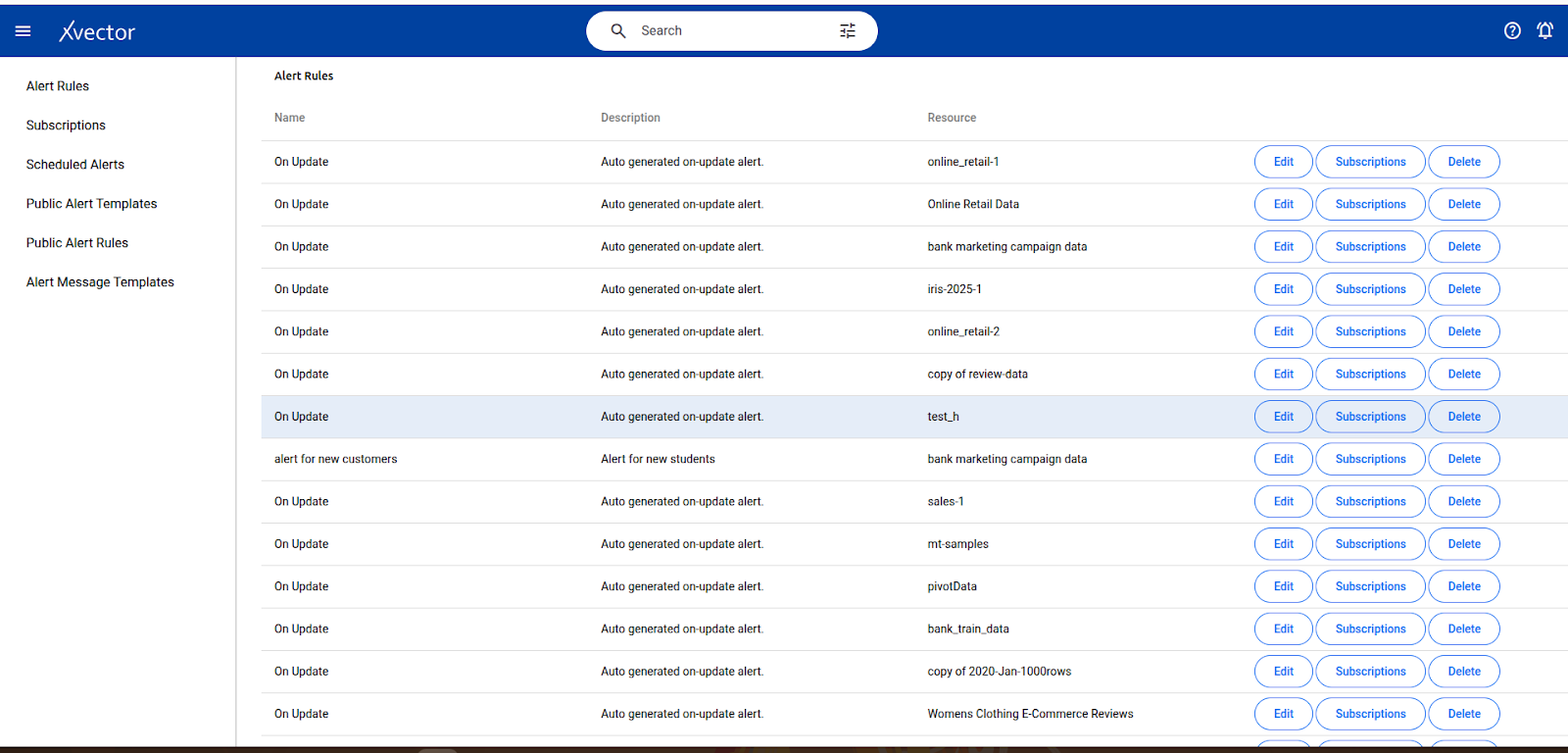

As the complexity increases due to the scale and variety of operations, manually reviewing the application for exceptions is unwieldy and potentially error-prone. Observability makes it manageable; the system detects anomalies based on rules and machine learning. Users can define alerts based on data updates, threshold rules, or anomalies.

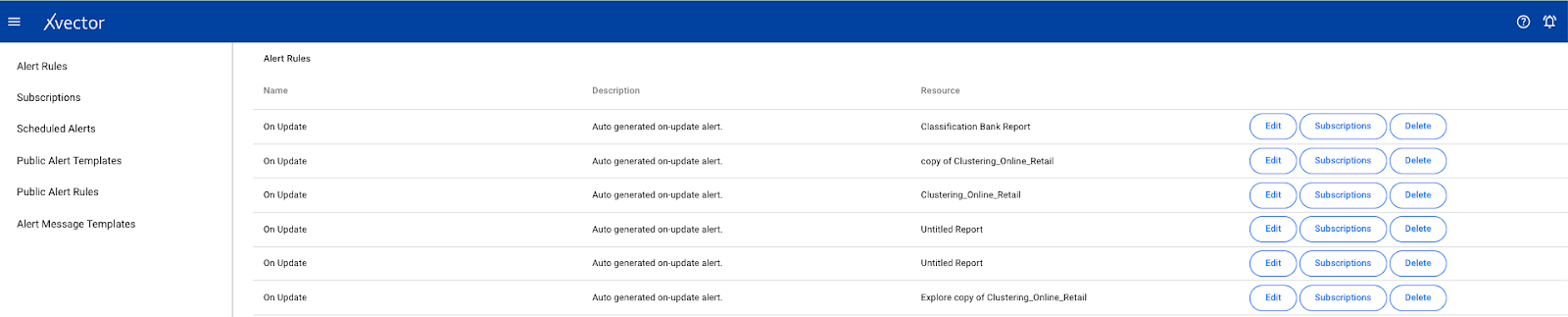

Users can monitor datasets, models, and reports by authoring alert rules. Alert rules are of the following types:

Threshold-based - for example, if revenue exceeds a given value, notify the user or user group.

Update-based - if the underlying resource, such as a dataset, is updated, the users or user groups subscribed to the alert rule are notified.

Anomaly-based - machine learning algorithms detect anomalies and notify the subscribers.
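The three rule types can be sketched as simple predicates. This is a hypothetical illustration: the function names and parameters are invented, and the anomaly rule uses a plain z-score test as a stand-in for the machine learning algorithms mentioned above.

```python
from statistics import mean, stdev


def threshold_alert(value, limit):
    """Threshold rule: fire when a metric (e.g. revenue) exceeds a limit."""
    return value > limit


def update_alert(last_seen_version, current_version):
    """Update rule: fire whenever the watched resource has a new version."""
    return current_version != last_seen_version


def anomaly_alert(history, value, z_limit=3.0):
    """Stand-in anomaly rule: fire when value is more than z_limit std devs from the mean."""
    if len(history) < 2:
        return False  # too little history to estimate spread
    sigma = stdev(history)
    if sigma == 0:
        return value != history[0]  # any change from a constant series is unusual
    return abs(value - mean(history)) / sigma > z_limit


def notify(subscribers, message):
    """Placeholder for delivering a notification to each subscribed user or group."""
    return [f"{subscriber}: {message}" for subscriber in subscribers]
```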

Read more: Observability

Governance (Discoverability, Monitoring, Security/Audit-trails)

Governance involves managing data used on the platform throughout its lifecycle, maintaining its value and integrity. It ensures the data is complete as required, secure, and compliant with the relevant regulations with an audit trail of activities. It provides accurate and timely data for informed decisions.

Read more: Governance (coming soon).

Platform Administration

User Management (CRUD)

- Cluster Setup and Admin

- Inviting users and managing permissions

- First steps: Log in and take a brief tour of the resources page

Login

One can log in to the platform by visiting https://xui.xvectorlabs.com/

Users must enter their email and password on the login page and click the Login button. After logging in, the home page displays a list of resources available by default or shared by other users. For a first-time user, this list will be empty. To start, click the Add button at the top right corner of the page to create a workspace.

It is recommended to go through the documents in the Concepts section to understand the different resources and then build an app in the created workspace.

App Store

The App Store contains publicly available workspaces. Users can use these already-created apps to accelerate their process. Users can also publish their workspaces as Apps.

All available apps can be accessed by clicking on ‘Apps’ present on the home page.

Workspace

Creating a workspace

- From the homepage, click on Add and choose Workspace

- Provide details

Name - Name of the workspace

Description - write a description of the workspace

Options

Once in the Workspace, xVector provides a list of options on the top right of the screen.

Each of the icons is described below:

- Presence: Shows users that are currently in the workspace

- Draft Server: Option to start and stop the draft server. This is used to prototype rapidly by collaborating with business users

- Data Dictionary: Helps understand the metadata of the datasets

- View as list/flow: Option to switch between list and flow type view of workspace resources.

- Auto layout: Automatically arranges all the resources in the workspace

- Grid lines: Displays vertical and horizontal lines for arranging resource cards/nodes manually

- Hide comments: To hide the comments on the workspace

- Add comments: Option to add new comments

- Settings: Modify workspace details

- Name -> name of the workspace

- Description -> Description of the workspace

- Image -> takes an image URL; the image is displayed on the workspace card

- Snapping -> for arranging the resource cards in the workspace

- Making the workspace a template -> to make the workspace a template

- Draft driver Settings -> Select the machine specifications of the draft driver you would like to use

Deleting a workspace

- Go back to the home page by clicking on “xVector” on the top left of the screen.

- Identify the workspace to be deleted and click the ellipsis menu for that workspace.

- Click on delete in the menu options.

Datasource

Data Source

In today's data-driven world, enterprise data is scattered across diverse landscapes - files, databases, object stores, cloud warehouses, and APIs embedded within various applications. At xVectorlabs, transforming this fragmented information into actionable insights begins with Data Sources.

The Gateway: Data Sources

Data Sources are the gateway, allowing users to connect to, import, and synchronize data from multiple origins. Whether the data resides in structured files, dynamic APIs, or sophisticated cloud storage systems, users can configure and execute a connector to bring it into xVectorlabs as a data source. A rich catalog of connectors, periodically updated by xVectorlabs, ensures compatibility with an ever-expanding array of systems. Missing a connector? Users can reach out to connectors@xvectorlabs.com, and a new one can be developed quickly to meet their needs.

Once connected, the process doesn’t stop at simply importing data. Updates from source systems are seamlessly UPSERTED, reflecting real-time changes while preserving the historical timeline of values. Bulk data import is supported with the OVERWRITE option. This meticulous synchronization ensures traceability, enabling businesses to trust the integrity and provenance of their data.
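The difference between the two write behaviors can be sketched with an in-memory table keyed by a record key (compare the Record Key table option described later). This is a conceptual illustration with hypothetical helper names, not xVector's storage engine: `upsert` inserts new keys, updates existing ones, and logs superseded values with a timestamp, while `overwrite` replaces the table wholesale.

```python
def upsert(table, history, records, key, ts):
    """Insert new keys, update changed ones, and log superseded values with a timestamp."""
    for record in records:
        k = record[key]
        if k in table and table[k] != record:
            history.setdefault(k, []).append((ts, table[k]))  # keep the old value
        table[k] = dict(record)
    return table


def overwrite(records, key):
    """Bulk load: build a fresh table from the records; earlier contents and history are discarded."""
    return {record[key]: dict(record) for record in records}
```

The history log is what makes the update timeline traceable; an overwrite keeps only the latest snapshot.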

A Seamless Experience

xVectorlabs simplifies the journey from raw data to actionable insights, offering users the tools to acquire, refine, and analyze data confidently. With regular updates to its connectors catalog and robust metadata management, the platform ensures that businesses can harness the full potential of their data ecosystem - turning scattered information into cohesive narratives that drive impactful decisions.

Connectors

Available connectors, by type:

- Files
  - CSV
  - JSON
  - GZIP

- Databases
  - MySQL
  - SQL Server
  - PostgreSQL
  - MongoDB

- Object Stores
  - S3
  - MinIO

- Cloud Data Warehouse
  - Amazon Redshift
  - Google BigQuery

- API
  - Salesforce
  - Mailchimp
  - Zoho

Common Features

Data sources share several important settings, described below.

Metadata

xVector automatically infers metadata using sampling techniques while creating a datasource. It is recommended that the metadata be reviewed carefully and any corrections and changes made if required. Metadata setting is a crucial step.

Available settings in metadata:

- COLUMN NAME

The column name as it appears in the source.

- COLUMN NEW NAME

The new name given to the column in the dataset when it is copied from the datasource.

- DESCRIPTION

Enter any description for a column. This description will be available to other users with access to the datasource and get copied into datasets created from this data source.

- DATA TYPE

xVector automatically infers the data type of the column using a sampling technique. It is recommended that the data type be reviewed for any potential errors. xVector infers int, float, string, and date-type columns automatically.

- FORMAT

The format applies to data types such as datetime and currency. For datetime data, one can choose from format options such as ‘YYYY-MM-DD’ and ‘DD/MM/YYYY HH:MM:SS’. This setting affects only how the data is displayed; the underlying data is not changed.

- SEMANTIC TYPE

You can choose an appropriate semantic type for the column. For example, if the column's data type is int, then semantic types such as SSN, zip code, etc., will be available. These settings are used in visualization.

- STATISTICAL TYPE

Choose the statistical type (for example, categorical or numerical) from the options provided. This is used for visualization and modeling; in xVector, metadata is used extensively throughout the platform.

- SKIP HISTOGRAM

It is recommended that the default setting be kept. If SKIP HISTOGRAM is false (the default), xVector generates a histogram for the column. Setting it to true skips only the histogram; the other profile information is still generated. Consider setting it to true when the column's cardinality is very large and computing the histogram would add significant load.

- NULLABLE

By default, NULLABLE is set to TRUE. If the column value cannot be null, turn the slider off to set it to FALSE. xVector will issue a warning if a non-nullable column is found to contain null values during the connection process.

- DIMENSION

Marks the column as a dimension, typically a categorical attribute used for grouping or filtering. This information is used in visualization and modeling.

- MEASURE

Marks the column as a measure, typically a numeric quantity that is aggregated. This information is used in visualization and modeling.
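To illustrate why sampled inference deserves a review, here is a minimal sketch of inferring int, float, date, or string from a random sample of raw values. The function names, sample size, null tokens, and candidate date formats are assumptions for illustration; xVector's actual inference rules are not documented here.

```python
import random
from datetime import datetime

# Assumed candidate formats and null tokens; a real system would support more.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y-%m-%d %H:%M:%S")
NULL_TOKENS = ("", "NULL")


def _parses(value, parser):
    """Return True if parser(value) succeeds without raising ValueError."""
    try:
        parser(value)
        return True
    except ValueError:
        return False


def _is_date(value):
    return any(_parses(value, lambda v, fmt=fmt: datetime.strptime(v, fmt)) for fmt in DATE_FORMATS)


def infer_type(values, sample_size=100, seed=0):
    """Infer int, float, date, or string from a random sample of raw string values."""
    rng = random.Random(seed)
    sample = values if len(values) <= sample_size else rng.sample(values, sample_size)
    present = [v for v in sample if v not in NULL_TOKENS]  # ignore nulls when inferring
    if not present:
        return "string"
    if all(_parses(v, int) for v in present):
        return "int"
    if all(_parses(v, float) for v in present):
        return "float"
    if all(_is_date(v) for v in present):
        return "date"
    return "string"
```

Because only a sample is inspected, a rare non-conforming value (for example, a single text entry in a mostly numeric column) can be missed, which is why the inferred DATA TYPE should be reviewed.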

Profile

Data profiling involves examining data to assess its structure, content, and quality. This process calculates various statistical values, including minimum and maximum values, the presence of missing records, and the frequency of distinct values in categorical columns. It can also identify correlations between different attributes in the data. Data profiling can be automated by setting the profile option to true or performed manually as needed.
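The statistics described above can be illustrated with a small, dependency-free sketch: per-column counts of missing values, minimum and maximum for numeric columns, value frequencies for categorical ones, and a correlation between two numeric columns. The function names are hypothetical, and Pearson correlation is an assumption about which correlation measure is used.

```python
from collections import Counter
from math import sqrt


def profile_column(values):
    """Basic profile: missing count, plus min/max for numbers or value frequencies otherwise."""
    present = [v for v in values if v is not None]
    stats = {"count": len(values), "missing": len(values) - len(present)}
    if present and all(isinstance(v, (int, float)) for v in present):
        stats.update(min=min(present), max=max(present))
    else:
        stats["frequencies"] = Counter(present).most_common()
    return stats


def pearson(xs, ys):
    """Pearson correlation of two numeric columns; pairs with a missing value are dropped."""
    pairs = [(x, y) for x, y in zip(xs, ys) if x is not None and y is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two complete pairs")
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    cov = sum((x - mx) * (y - my) for x, y in pairs)
    sx = sqrt(sum((x - mx) ** 2 for x, _ in pairs))
    sy = sqrt(sum((y - my) ** 2 for _, y in pairs))
    return cov / (sx * sy)  # undefined (ZeroDivisionError) for a constant column
```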

CSV

A CSV datasource is used to bring in CSV data from a local system. Follow the process below to create this datasource:

1. From a workspace, click on Add and choose the Datasources option.

2. Choose the CSV data source type from the available list.

3. Go through the steps:

a. Configure

- Choose File: Click on the Choose File button and browse your local system to select the required CSV file.

- Name: Provide a meaningful name

- Delimiter: Choose a delimiter according to the file

- Header: Set to true if the header is present in the file, otherwise set to false.

b. Advanced

- Workspace - Select a workspace for datasource

- Encoding - Select encoding

- Quote - Sets a single character used for escaping quoted values where the separator can be part of the value.

- Escape - Sets a single character used for escaping quotes inside an already quoted value.

- Comment - Sets a single character used for skipping lines beginning with this character. By default, it is disabled.

- Null value - Sets the string representation of a null value.

- NaN value - Sets the string representation of a not-a-number (NaN) value.

- Positive Inf - Sets the string representation of a positive infinity value.

- Negative Inf - Sets the string representation of a negative infinity value.

- Multiline - Parse one record, which may span multiple lines, per file.

- Mode - how malformed records are handled (permissive or drop malformed)

- Quote Escape Character - Sets a single character used for escaping the escape for the quote character.

- Empty Value - Sets the string representation of an empty value

- Write Option - Upsert, Bulk Insert, Insert, Delete

- Run Profile - Set to true for running profile

- Run Correlation - Set to true for running correlation

- Machine Specifications - use the default, or set to false to provide custom machine specifications

- Expression - write expressions to be executed while reading the data

c. Preview Data - display a sample of data

d. Column Metadata - view the automatically inferred metadata and modify it if needed.

Scrolling to the right gives the following:

e. Table Options -> (Record Key, Partition Key, ...)

4. Click on Save
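To show how the Configure and Advanced options change parsing, the sketch below uses Python's standard `csv` module, not xVector's reader. The `parse_csv` helper and its defaults are hypothetical; it covers delimiter, header, quote, escape, comment, null value, and NaN value, and names headerless columns `_c0`, `_c1`, and so on as an assumed convention.

```python
import csv
import io
import math


def parse_csv(text, delimiter=",", header=True, quote='"', escape="\\",
              comment=None, null_value="", nan_value="NaN"):
    """Parse CSV text with options similar to the datasource settings (illustrative only)."""
    # Comment: skip lines that begin with the comment character, if one is set.
    lines = [line for line in text.splitlines() if not (comment and line.startswith(comment))]
    reader = csv.reader(io.StringIO("\n".join(lines)), delimiter=delimiter,
                        quotechar=quote, escapechar=escape)
    rows = list(reader)
    if header and rows:
        columns, rows = rows[0], rows[1:]
    else:
        columns = [f"_c{i}" for i in range(len(rows[0]))] if rows else []

    def convert(value):
        # Null value and NaN value: map their string representations to Python values.
        if value == null_value:
            return None
        if value == nan_value:
            return math.nan
        return value

    return columns, [[convert(v) for v in row] for row in rows]
```

Note how the quote character lets a field such as `"Doe; Jane"` contain the `;` delimiter without being split.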

S3

An S3 datasource is used to bring in data from an AWS S3 bucket. You need an AWS access key ID and secret access key to read data from the bucket.

Follow the process below to create this datasource:

1. From a workspace, click on Add and choose the Datasources option.

2. Choose the S3 data source type from the available list.

3. Go through the steps:

a. Configure

- Datasource name - provide a meaningful name for the data

- Saved accounts - select a previously saved account

- New account name - provide an account name for creating a new account

- AWS access key ID - provide the AWS access key ID

- AWS secret key - enter the AWS secret key

- The IP address for allowed IPs - shows the allowed IPs list

- File type - choose file type (CSV, JSON)

- Test Connection - Click on the button to test the connection with the provided credentials

- Extension - choose file extension (CSV, JSON, gzip)

b. Advanced

- Workspace - choose from available options

- Bucket - select the source S3 bucket

- Prefix for files - provide prefix for files

- Choose folder - choose files from the S3 bucket

- Header - Set to true if the header is present in the file, otherwise set to false.

- Delimiter - Choose a delimiter according to the file

- Encoding - Select encoding

- Quote - Sets a single character used for escaping quoted values where the separator can be part of the value.

- Escape - Sets a single character used for escaping quotes inside an already quoted value.

- Comment - Sets a single character used for skipping lines beginning with this character. By default, it is disabled.

- Null value - Sets the string representation of a null value.

- NaN value - Sets the string representation of a not-a-number (NaN) value.

- Positive Inf - Sets the string representation of a positive infinity value.

- Negative Inf - Sets the string representation of a negative infinity value.

- Multiline - Parse one record, which may span multiple lines, per file.

- Quote Escape Character - Sets a single character used for escaping the escape for the quote character.

- Empty Value - Sets the string representation of an empty value

- Write Option - Choose from Upsert, Bulk Insert, Insert, Delete

- Expression - write expressions to be executed while reading the data

c. Preview Data - display a sample of data

d. Metadata - view the automatically inferred metadata and modify it if needed.

Scrolling to the right shows the following:

e. Table Options - Record Key, Partition Key

4. Click on Save

A status screen will come up. One can track the status of the process. On completion, it will redirect to the workspace page.
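Conceptually, the Bucket, Prefix for files, and Extension settings narrow down which objects are read. The sketch below is an illustration under assumptions: `list_keys` uses boto3's `list_objects_v2` paginator (boto3 must be installed and the credentials valid), and `select_files` and its extension mapping are hypothetical. Neither reflects how xVector implements the connector.

```python
def list_keys(bucket, prefix, access_key_id, secret_access_key):
    """List object keys under a prefix with boto3 (requires boto3 and valid credentials)."""
    import boto3  # imported lazily so select_files works without boto3 installed

    s3 = boto3.client(
        "s3",
        aws_access_key_id=access_key_id,
        aws_secret_access_key=secret_access_key,
    )
    keys = []
    for page in s3.get_paginator("list_objects_v2").paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


# Assumed mapping from the Extension setting to file-name suffixes.
EXTENSION_SUFFIXES = {"csv": (".csv",), "json": (".json",), "gzip": (".gz", ".gzip")}


def select_files(keys, prefix="", extension="csv"):
    """Keep keys under the prefix that end with the chosen extension (folder markers skipped)."""
    suffixes = EXTENSION_SUFFIXES[extension.lower()]
    return [
        key for key in keys
        if key.startswith(prefix) and not key.endswith("/") and key.lower().endswith(suffixes)
    ]
```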

Dataset

Dataset Overview

Data is the foundation of every insightful analysis, yet its raw form often lacks the structure and clarity needed for effective decision-making. Within xVectorlabs, raw data enters the system from various sources like transactional databases, APIs, logs, or third-party integrations. However, before it can be leveraged for exploratory analysis, machine learning, or operational reporting, it must be refined and structured into a more usable format.

This journey from raw data to a structured dataset involves multiple steps, ensuring accurate and insightful information. The process begins with ingestion, where data is pulled into the system (as seen in the Datasource document), followed by profiling and metadata definition, which help uncover patterns, inconsistencies, and key attributes. Following this, enrichment techniques enhance the data, making it more relevant and applicable for downstream applications.

Refining the Raw: Datasets

As data flows into the system, it becomes a Dataset - a structured, enriched version ready for analysis and modeling. This transformation begins with profiling, where metadata is extracted, and each column is classified based on its data type, statistical type (categorical or numerical), and semantic type (e.g., email, URL, or phone number). This metadata provides context, shaping downstream processes like exploratory analysis or machine learning model training.

The enrichment process brings data to life. Users can leverage an extensive toolkit to:

- Profile the data and detect anomalies.

- Join datasets to discover relationships.

- Apply entity extraction, other NLP functions, and other models to the data.

- Manually edit numerical, text, or image data for precise refinements.

This step bridges the gap between raw data and actionable insights, enabling users to prepare datasets that fuel the development of robust models and their real-world applications.

The Power of Traceability

The clear distinction between a Data Source and a Dataset isn’t just about workflow organization - it’s about traceability and trust. By maintaining the data lineage, users can always trace back to the source, ensuring transparency in processing and confidence in decision-making.

Building and Enriching Datasets

Users can create datasets from the data sources; metadata is copied along with the data. They can apply various transformations to the data, and the resulting data is persisted with an appropriate policy, such as OVERWRITE or UPSERT. Users can define the pipelines based on their requirements. If they need to access the update timeline, UPSERT would be an appropriate update policy. Furthermore, users can set up synchronization properties that suit the use case, such as on-demand, schedule, or event.

A dataset is derived from a data source and transformed to meet the needs of the DataApp. Data sources are immutable, establishing the provenance of various computations to the source systems.

Users enrich the dataset using a series (flow) of functions (actions) such as filters and aggregates. They can also apply trained models to compute new columns. For example, they can apply a churn classifier to the latest order data to identify customers who are likely to churn. Users validate and save the logic. Once it is saved, they can apply the changes to the original dataset and persist the new dataset to disk using the Materialize action. Users must be cognizant of actions that change the dataset's structure, such as joins or aggregations; if the actions change the structure, OVERWRITE is the recommended update policy.
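An enrichment flow can be thought of as an ordered list of actions, each taking the current rows and returning new rows. The sketch below is hypothetical (the helpers are invented for illustration) and shows a filter followed by a model-derived column, plus a `materialize` step that writes JSON Lines to disk purely as an example format.

```python
import json


def run_flow(rows, actions):
    """Apply each action (rows -> rows) in order, like the steps of an enrichment flow."""
    for action in actions:
        rows = action(rows)
    return rows


def filter_action(predicate):
    """Keep only rows for which predicate(row) is true."""
    return lambda rows: [row for row in rows if predicate(row)]


def derive_column(name, func):
    """Add a column computed per row, e.g. by calling a trained model's predict function."""
    return lambda rows: [{**row, name: func(row)} for row in rows]


def materialize(rows, path):
    """Persist the enriched rows to disk, one JSON object per line."""
    with open(path, "w", encoding="utf-8") as fh:
        for row in rows:
            fh.write(json.dumps(row) + "\n")
```

For instance, `run_flow(orders, [filter_action(lambda r: r["active"]), derive_column("likely_churn", predict)])` keeps active orders and adds a churn flag, where `predict` stands in for a trained classifier. Because `derive_column` adds a column, this flow changes the dataset's structure, so by the guidance above OVERWRITE would be the safer policy when materializing it.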

While enrichment functions and our generative AI agent can suffice for business analysts, data engineers might prefer to transform data programmatically. Custom functions allow advanced users to author functions to alter the dataset programmatically.

Creating a Dataset

1. From a workspace, click on Add and choose the Datasets option

Or choose Create Dataset from the menu options of a Datasource.

2. Configure

- Select datasource - select datasource from the available list

- Type - choose type of the dataset

Datasets are of the following types:

- Fact (default) indicates that the dataset is primarily a collection of facts - for example, orders, shipments, inventories, etc.

- Entity indicates a collection of entities such as suppliers, customers, partners, distribution centers, facilities, channels, and other core entities around which the data apps create new analytical attributes, for example, a customer segment. These entity tables are used for filtering and some lightweight mastering to facilitate easy translation and transmission of data to other enterprise systems that need the information to operate on the decisions enabled by the data app.

- Entity hierarchy captures hierarchical relationships among the entities, which is useful in filtering reports.

3. Advanced

- Dataset name - provide a name to the dataset

- Machine Specification - use the default, or set to false to provide custom machine specifications

- Table Options - (Record Key, Partition Key, ...)

4. Select Workspace - select a workspace for the dataset.

Once the dataset is created, there are several options for exploring and enriching it. These options can be accessed from two places: the workspace and the dataset page. Details of each follow.

Enrich: Workspace View

Click the vertical ellipsis (kebab menu) on the dataset tile in the workspace to see the following options:

View

To view the data. Takes you to the dataset screen.

Update Profile

Computes the dataset profile. Run this each time the dataset is created or updated so that the profile can be viewed.

View Profile

To view the profile of the data. This can be viewed only after the “update profile” is run at least once after creating or updating the dataset.

Create copy

Creates a copy of the data

Generate Report

Generates an AI-powered report dashboard. This can be used as a starting point, and the report can then be edited.

Generate Exploratory Report

Generates an AI-powered report to explore the dataset.

Generate Model

Generates an AI-powered model by choosing the feature columns and automatically optimizing the training parameters based on your prompt. This can be used as a starting point, and the user can update parameters and metrics to generate more experiments and runs.

Activity Logs

Shows the list of activities that occurred on that dataset

Map Data

Maps column metadata from the source to the target (used in Data Destination).

Sync

Synchronizes the dataset with the data source.

Update

Updates the data as per source

Materialize

To persist the dataset in the filesystem

Publish

Publishes the dataset and assigns it a version. Once published, the dataset offers a guaranteed interface to consuming applications.

Export

Writes the data to a target system (points to data destination)

Settings

Opens the settings tab for the dataset

Delete

Option to delete the dataset

Enrich: Dataset View

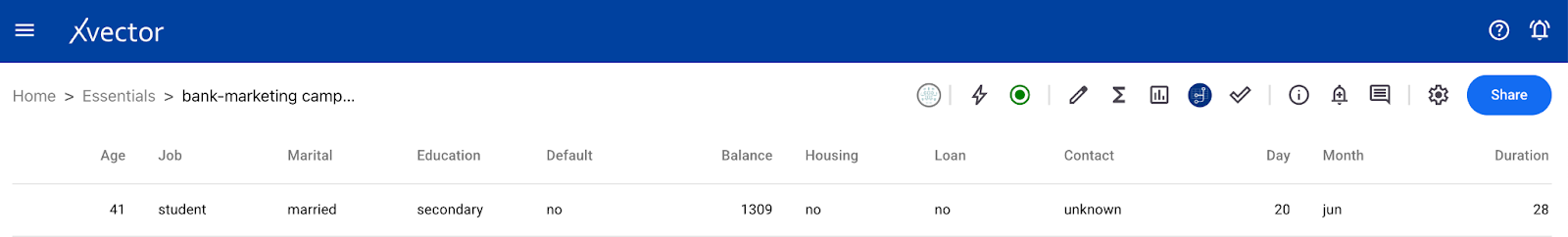

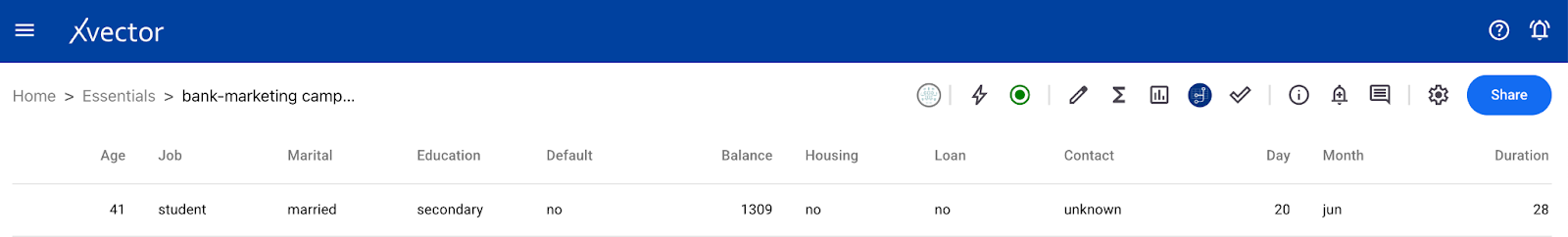

Double click on the dataset tile in the workspace to get to the dataset page or click on the view option on the vertical ellipses of the dataset tile in the workspace. This will take you to the dataset view page.

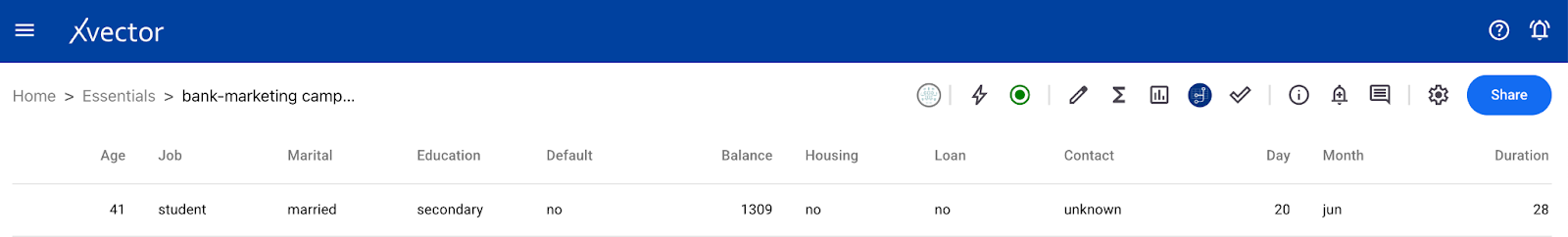

The screenshot above shows the dataset view page. The features at the top right of the page are described below, from left to right.

Presence

Shows which users are currently in the workspace. There may be more than one user at a time.

GenAI

The ability for users to ask data-related questions in natural language and get an automatic response. For example, the user could ask the question, “How many unique values does the age column have?”. GenAI would respond with the number of unique values for that column.

Driver (play button)

Starts or shuts down the DML driver for the dataset.

Edit Table

Ability to search or filter each of the columns in the dataset

Data Enrichment

Option to add an xflow action or view the enrichment history. Each enrichment function is described in detail in the Enrichment (Σ) section below.

Profile and Metadata Report

- Column Stats - shows histogram and statistical information about the data

- Correlation - shows the correlation matrix

- Column Metadata - metadata of the dataset

Data profiling involves examining data to assess its structure, content, and overall quality. This process calculates various statistical values, including minimum and maximum values, the presence of missing records, and the frequency of distinct values in categorical columns. It can also identify correlations between different attributes in the data. Data profiling can be automated by setting the profile option to true or performed manually as needed.

Write back

The user can write the data to a target system

Reviews and Version control

Ability to add reviewers and publish different versions of the resource

Action Logs

Shows the log of actions taken on the dataset.

Alerts

Option to create, update, or subscribe to an alert. Alerts are described in more detail in the Observability section.

Comments

Users can add comments to collaborate with other users.

Settings

- Basic

- Name - update name of the dataset

- Description - update the description for the data

- Workspace - select workspace from available options

- Type - type of the dataset (entity or fact)

- Advanced

- Spark parameters

- Synchronization - set to true or false as required. This is used to update/sync the dataset with its source. Users can define policies and actions for synchronization-

- Policy Type - on demand, on schedule, rule-based

- Write mode - upsert, insert, bulk insert

- Update Profile

- Anomaly detection

- Alerts

- Share - to share the dataset with other users or user groups

- View - to view the data

Enrichment (Σ)

The dataset is enriched using a series (flow) of functions such as aggregates and filters. Users can also apply trained models to compute new columns. Advanced users can author custom functions (*) to manipulate the data.

Getting to Enrichment Functions

- Once you are in a Workspace, click on the ellipses of the dataset (tile with green icon)

- Click on View.

Or

- Once you are in a Workspace, double-click on the dataset

- This will take you to the dataset view screen, with icons at the top of the page.

- The DML driver must be running (indicated by a green dot in the icons) to run any enrichment function. Start and stop the DML driver using the play-button icon on the dataset view page.

- Once on the dataset “view” page, click on the sigma icon - Σ

- Click on Add an action and choose the function from the dropdown list.

The following sections will describe each of the enrichment functions.

Aggregate

This action performs a calculation on a set of values, such as SUM or AVERAGE, and returns a single value for each group.

Follow these steps to perform aggregate:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose aggregate

- Provide details:

- Select columns to group by - select columns that require grouping

- Add columns and aggregation - add columns with a corresponding aggregation function

- New column name - provide a name for aggregated columns

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example:

In this example, we aggregate the German Credit dataset to get the total credit amount and the total duration, grouped by risk, purpose, housing, job, and sex.

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose aggregate

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset.
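The grouping logic behind the aggregate action can be sketched in plain Python. This is an illustration only, not xVector's implementation: the column names (`risk`, `purpose`, `credit_amount`, `duration`), the `sum_` prefix for the new columns, and the rows are made up, and only two of the five group-by columns are used to keep it short.

```python
from collections import defaultdict

# Made-up rows standing in for German Credit Data (hypothetical column names).
rows = [
    {"risk": "good", "purpose": "car", "credit_amount": 1000, "duration": 12},
    {"risk": "good", "purpose": "car", "credit_amount": 2500, "duration": 24},
    {"risk": "bad", "purpose": "car", "credit_amount": 4000, "duration": 36},
    {"risk": "good", "purpose": "radio/TV", "credit_amount": 800, "duration": 6},
]


def aggregate_sum(rows, group_by, sum_columns):
    """Group rows by `group_by` and sum each of `sum_columns` within every group."""
    totals = defaultdict(lambda: {col: 0 for col in sum_columns})
    for row in rows:
        key = tuple(row[col] for col in group_by)
        for col in sum_columns:
            totals[key][col] += row[col]
    # One output row per group: the group-by values plus one "sum_<col>" column each.
    return [
        {**dict(zip(group_by, key)), **{f"sum_{col}": v for col, v in sums.items()}}
        for key, sums in totals.items()
    ]


result = aggregate_sum(rows, ["risk", "purpose"], ["credit_amount", "duration"])
```

Each distinct combination of group-by values yields exactly one output row, which is why the aggregated dataset is usually much smaller than the input.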

Author Function

The “author” function can be used when writing a custom function to be applied to a dataset.

Follow these steps to perform the author function:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose the author function

- Provide details:

- Function name - provide the name of the custom function

- Launch notebook - this launches a notebook server. Update the custom function in the default template file with the required logic, then click ‘save’ under the xVector menu option.

- Validate - click on validate to verify the custom function

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example:

For example, we will use bank marketing campaign data. We want to categorize the individuals into three groups - student, adult, and senior depending on age. For this, we will author a custom function and run it on the dataset.

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose the author function

- Provide details and click on Launch Notebook

- Click save under the xVector menu option

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
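The body of such a custom function might look like the following. The notebook's default template and its function signature are not shown in this document, so the function name `categorize_age`, the row-by-row calling convention, the `age_group` column name, and the boundaries (under 18 is student, 18 through 60 is adult, over 60 is senior) are all assumptions for illustration.

```python
def categorize_age(age):
    """Map a numeric age to 'student', 'adult', or 'senior' (assumed boundaries)."""
    if age is None:
        return None  # leave missing ages uncategorized
    if age < 18:
        return "student"
    if age <= 60:
        return "adult"
    return "senior"


# Apply the function to every row to fill a new, hypothetical 'age_group' column.
rows = [{"age": 16}, {"age": 35}, {"age": 60}, {"age": 72}, {"age": None}]
for row in rows:
    row["age_group"] = categorize_age(row["age"])
```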

Custom_sql (Coming soon…)

Datatype

This action is used to change the data type of a column in the dataset.

Follow these steps to perform data type change:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose datatype

- Provide details:

- Select Column - select a column for which you want to update the data type

- Select new type - select new type from the available list

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we will use autoinsurance_churn_with_demographic data. Its column “Influencer” is stored as a string, and we will change it to an integer.

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose datatype

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
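Converting a string column to integers fails on values that are not valid integers, so it helps to decide in advance how such values are handled. The sketch below converts `Influencer` values and maps blank or unparsable strings to `None`; that fallback is an assumption for illustration, not a documented behaviour of the datatype action.

```python
def to_int_or_none(value):
    """Convert values such as '1' or ' 0 ' to int; return None when parsing fails."""
    if value is None:
        return None
    try:
        return int(str(value).strip())
    except ValueError:
        # Empty strings, words like 'yes', and decimals like '1.0' end up here.
        return None


rows = [{"Influencer": "1"}, {"Influencer": " 0 "}, {"Influencer": ""}, {"Influencer": "yes"}]
for row in rows:
    row["Influencer"] = to_int_or_none(row["Influencer"])
```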

Delete_rows

This action is used to delete the rows of a dataset that match a condition.

Follow these steps to delete rows -

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose Delete rows

- Provide details-

- Expression - write the conditions for deleting rows

- Notes - add notes to describe the action taken

- SQL Editor - write the deletion condition as a SQL expression

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

The dataset medical_transcription_with_entities has a column ‘predicted_value’. We will delete the rows where predicted_value contains an empty list ([]).

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose Delete rows

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
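Assuming the deletion condition is written as a SQL expression, the example can be reproduced locally with Python's built-in `sqlite3` module; the condition plays the role of the `WHERE` clause. The sketch assumes `predicted_value` stores the list as text (so an empty list is the string `'[]'`); the table name `transcriptions` and the rows are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transcriptions (id INTEGER, predicted_value TEXT)")
conn.executemany(
    "INSERT INTO transcriptions VALUES (?, ?)",
    [(1, '["aspirin"]'), (2, "[]"), (3, '["fever", "cough"]'), (4, "[]")],
)

# Deletion condition: rows whose predicted_value is an empty list.
conn.execute("DELETE FROM transcriptions WHERE predicted_value = '[]'")
remaining = [row[0] for row in conn.execute("SELECT id FROM transcriptions ORDER BY id")]
```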

Drop_columns

This option is used to remove columns from the dataset.

Follow these steps to drop columns -

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose drop_columns

- Provide details-

- Select columns for deletion

- Notes - add notes to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We will use the dataset ‘datatype update on autoinsurance_churn with demographic’ and drop the columns ‘has_children’ and ‘length_of_residence’.

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose drop_columns

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
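Dropping columns amounts to removing the selected keys from every row. A minimal sketch with made-up rows (the `drop_columns` helper and the `city` column are hypothetical):

```python
def drop_columns(rows, columns):
    """Return new rows without the given columns; names not present are ignored."""
    to_drop = set(columns)
    return [{k: v for k, v in row.items() if k not in to_drop} for row in rows]


rows = [{"individual_id": 1, "has_children": 1, "length_of_residence": 8, "city": "Dallas"}]
trimmed = drop_columns(rows, ["has_children", "length_of_residence"])
```

The helper builds new rows rather than mutating the input, which loosely mirrors how the action leaves the stored data untouched until the dataset is materialized.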

Dropna

This option is used to remove rows with missing (NA) values from the dataset.

Follow these steps to drop rows with missing values -

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose dropna

- Provide details-

- Select condition-

- Any: If any NA values are present, drop that row.

- All: If values for all columns are NA, drop that row.

- Select columns - the columns to check for NA values; only these columns are considered when deciding whether to drop a row.

- Notes - add notes to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We will use the dataset ‘datatype update on autoinsurance_churn with demographic’ and delete the rows where the ‘latitude’ and ‘longitude’ values are missing.

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose dropna

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
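The Any/All conditions and the column selection can be sketched as follows, modelled loosely on the `how` and `subset` parameters of pandas' `DataFrame.dropna`. Representing a missing value as `None` and the helper name `dropna` are assumptions for illustration.

```python
def dropna(rows, how="any", subset=None):
    """Drop rows with missing (None) values.

    how="any": drop a row if any checked column is None.
    how="all": drop a row only if every checked column is None.
    subset: the columns to check; all columns of the row when None.
    """
    if how not in ("any", "all"):
        raise ValueError("how must be 'any' or 'all'")
    kept = []
    for row in rows:
        cols = subset if subset is not None else list(row)
        missing = [row.get(col) is None for col in cols]
        # With no columns to check, nothing counts as missing and the row is kept.
        drop = bool(missing) and (any(missing) if how == "any" else all(missing))
        if not drop:
            kept.append(row)
    return kept


rows = [
    {"id": 1, "latitude": 32.7, "longitude": -96.8},
    {"id": 2, "latitude": None, "longitude": -97.1},
    {"id": 3, "latitude": None, "longitude": None},
]
any_result = dropna(rows, how="any", subset=["latitude", "longitude"])
all_result = dropna(rows, how="all", subset=["latitude", "longitude"])
```

With "Any", row 2 is dropped because its latitude is missing; with "All", it survives because its longitude is present.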

Explode

The explode function converts an array/list of items into rows, producing one row per item. As a result, it typically increases the number of rows in the dataset.

Follow these steps to perform explode:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose explode

- Provide details:

- Select column - select column with list objects

- New column name - provide a name for the new column

- Drop column - to drop the source column after the operation

- Explode JSON - to extract data from JSON

- Notes - add notes to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

The dataset medical_transcription_with_entities has a column ‘medical_speciality’ that holds lists. We will explode medical_speciality so that each value in the list gets its own row, which increases the number of rows.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose explode

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
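The row-multiplying effect of explode can be sketched in plain Python. The parameter names mirror the form fields but are hypothetical, and emitting a single row with `None` for an empty list is an assumption borrowed from pandas' `DataFrame.explode`, not documented xVector behaviour.

```python
def explode(rows, column, new_column=None, drop_source=False):
    """Emit one output row per element of the list in `column`.

    The element is stored in `new_column` (defaults to `column` itself).
    An empty or missing list yields a single row holding None.
    """
    target = new_column or column
    out = []
    for row in rows:
        for item in row[column] or [None]:
            new_row = dict(row)
            if drop_source and target != column:
                del new_row[column]  # remove the original list column
            new_row[target] = item
            out.append(new_row)
    return out


rows = [
    {"id": 1, "medical_speciality": ["Cardiology", "Radiology"]},
    {"id": 2, "medical_speciality": ["Neurology"]},
    {"id": 3, "medical_speciality": []},
]
exploded = explode(rows, "medical_speciality", new_column="speciality", drop_source=True)
```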

Fillna

This action is used to replace null values in a dataset with a specified value.

Follow these steps to perform fillna-

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose fillna

- Provide details-

- Select Column - select the column that contains null values

- Fill value - enter the value that replaces nulls

- Fill method - choose from available options

- Notes - enter text to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We have autoinsurance_churn_data joined with demographic data. Its City column contains null values, which we will replace with the string ‘Not Available’.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose Fillna

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
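Replacing nulls with a fixed fill value can be sketched as below. Only the "Fill value" option is modelled; the available fill methods are not listed in this section, so none are assumed. The helper name `fillna` and the rows are illustrative.

```python
def fillna(rows, column, value):
    """Return copies of the rows with None in `column` replaced by `value`."""
    return [
        {**row, column: value} if row.get(column) is None else dict(row)
        for row in rows
    ]


rows = [{"individual_id": 1, "City": "Austin"}, {"individual_id": 2, "City": None}]
filled = fillna(rows, "City", "Not Available")
```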

Filter

The filter function keeps only the rows of a dataset that meet a set of criteria.

Follow these steps to perform the filter:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose filter

- Provide details:

- Expression - write SQL expression for filter condition

- Notes - add notes describing the step or action

- Validate - click on validate to verify the expression

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We will use Walmart Sales data and filter the records for Store 1.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose filter

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
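Because the filter condition is a SQL expression, its effect can be checked locally with Python's built-in `sqlite3` module, where the expression corresponds to the `WHERE` clause. The table name and columns (`Store`, `Date`, `Weekly_Sales`) and the sales figures are assumptions; the exact SQL dialect accepted by xVector is not stated here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE walmart_sales (Store INTEGER, Date TEXT, Weekly_Sales REAL)")
conn.executemany(
    "INSERT INTO walmart_sales VALUES (?, ?, ?)",
    [(1, "2010-02-05", 1000.0), (2, "2010-02-05", 2000.0), (1, "2010-02-12", 1500.0)],
)

# Filter expression "Store = 1" used as the WHERE condition.
store_1 = conn.execute(
    "SELECT Store, Date, Weekly_Sales FROM walmart_sales WHERE Store = 1 ORDER BY Date"
).fetchall()
```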

Join

This action is used to join two datasets based on a particular column.

Follow these steps to perform a join:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose join

- Provide details:

- Select the right dataset - select the dataset to be joined as the right table

- Select join type - select the type of join

- Select columns from the table - select columns from both tables

- Expression - write the expression for the selected join

- Validate - click on validate to verify the expression

- Save - to run the action on the dataset

- Materialize/Materialize Overwrite - to persist modified data

Example

For example, we will join the autoinsurance_churn dataset with the individuals_demographic dataset. Here, the left dataset is autoinsurance_churn, the right dataset is individuals_demographic, and the join column is individual_id, which is present in both datasets.

- Open the view page of the dataset and click on the Sigma icon

- Click on Add an action and choose join

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
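The join in this example can be sketched in SQL with Python's built-in `sqlite3` module. The choice of a LEFT JOIN is an assumption (the example does not say which join type is selected), and the `churn` and `city` columns and their values are made up; the `ON` clause corresponds to the join expression.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE autoinsurance_churn (individual_id INTEGER, churn INTEGER)")
conn.execute("CREATE TABLE individuals_demographic (individual_id INTEGER, city TEXT)")
conn.executemany("INSERT INTO autoinsurance_churn VALUES (?, ?)", [(1, 0), (2, 1), (3, 0)])
conn.executemany("INSERT INTO individuals_demographic VALUES (?, ?)", [(1, "Dallas"), (2, "Austin")])

# LEFT JOIN keeps every row of the left dataset; unmatched right-side columns become NULL.
joined = conn.execute(
    """
    SELECT c.individual_id, c.churn, d.city
    FROM autoinsurance_churn AS c
    LEFT JOIN individuals_demographic AS d ON c.individual_id = d.individual_id
    ORDER BY c.individual_id
    """
).fetchall()
```

With an inner join instead, individual 3 (who has no demographic record) would be dropped from the result.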

JSON Normalize

This action extracts fields from JSON objects into structured columns.

Follow these steps to perform json_normalize:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose json_normalize

- Provide details:

- Select column - select column having JSON object

- Prefix - provide a prefix for new columns

- Keys to Project - provide the JSON keys to extract into new columns

- Range of indices to extract - enter the range of indices to extract. If no range is provided, all are extracted.

- Drop column - to drop the source column after the operation

- Notes - add notes to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we will perform json_normalize on Clothing E-commerce Reviews data. This dataset contains JSON data in the predicted_value column. We will extract the ‘polarity’ and ‘sentiment’ keys from the JSON data into new columns.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose json_normalize

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
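Projecting selected JSON keys into prefixed columns can be sketched with Python's `json` module. The helper name, the `pv_` prefix, the `review_id` column, and the sample values are hypothetical, and the "Range of indices" option is not modelled.

```python
import json


def json_normalize_column(rows, column, keys, prefix="", drop_source=False):
    """Parse the JSON object in `column` and copy the selected keys into new columns.

    Each new column is named prefix + key; keys absent from the object become None.
    """
    out = []
    for row in rows:
        raw = row.get(column)
        obj = json.loads(raw) if isinstance(raw, str) else (raw or {})
        new_row = dict(row)
        if drop_source:
            new_row.pop(column, None)
        for key in keys:
            new_row[prefix + key] = obj.get(key)
        out.append(new_row)
    return out


rows = [
    {"review_id": 1, "predicted_value": '{"polarity": 0.8, "sentiment": "positive"}'},
    {"review_id": 2, "predicted_value": '{"polarity": -0.4, "sentiment": "negative"}'},
]
flat = json_normalize_column(rows, "predicted_value", ["polarity", "sentiment"], prefix="pv_", drop_source=True)
```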

Model Apply

This action is used to apply a trained model to the dataset. For this function, the trained model needs to be deployed and running. This produces a new column with values predicted by the model.

Follow these steps to perform the model apply:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose model apply

- Provide details-

- Select model - select a deployed model from the available list

- Predictor Column name - provide a name for the prediction column

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We will perform model_apply on Clothing e-commerce review data. A model for analyzing sentiments has already been deployed, and we will use this to predict the sentiments on the reviews data.

- Open the view page of the dataset and click on the Sigma icon

- Click on Add an action and choose model_apply

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset

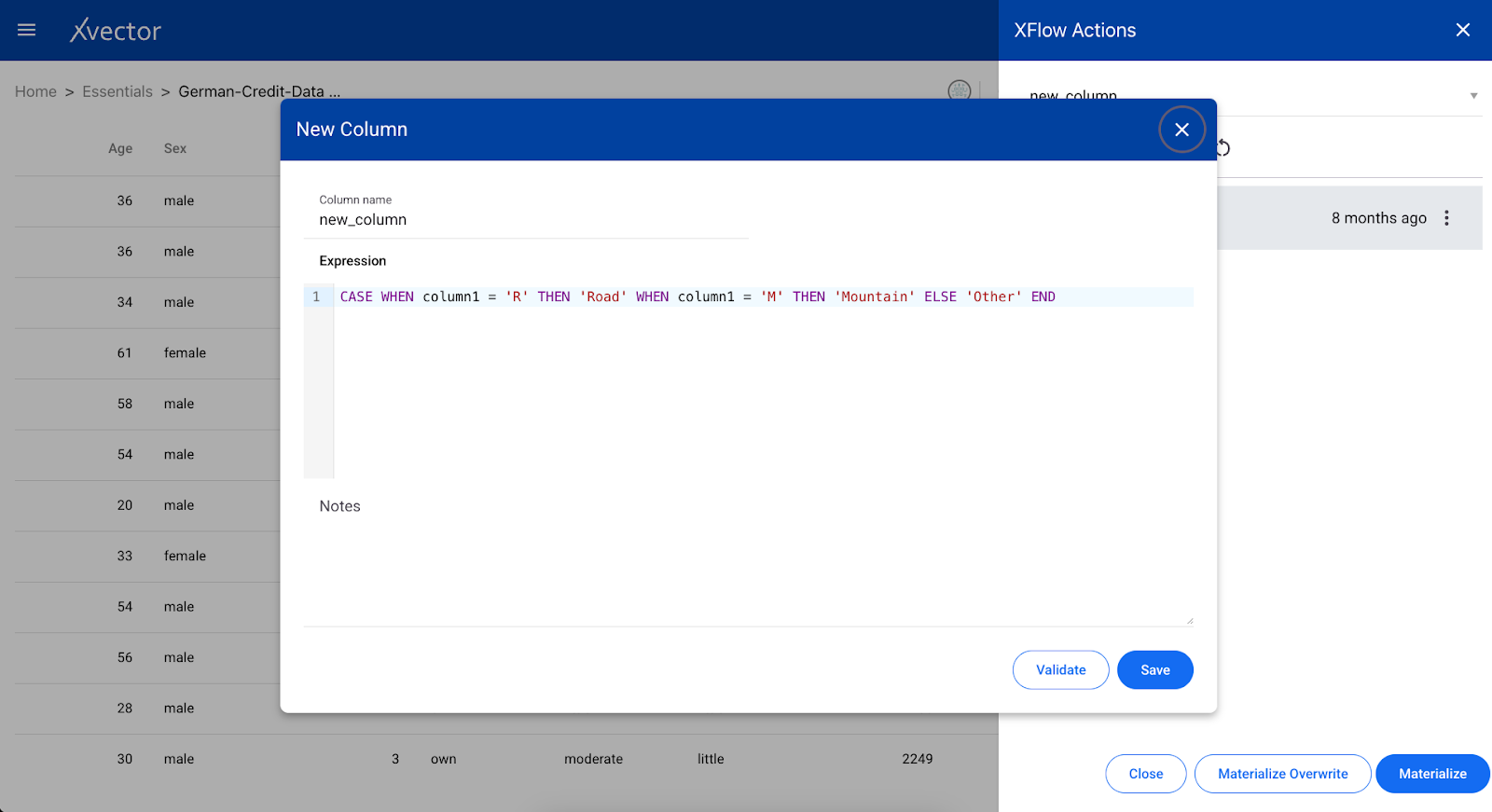

New_column

This action creates a new column in the dataset based on the provided expression. One can create a new column based on the values of other existing columns.

Follow these steps to add a new column:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose new column

- Provide details:

- Column name - a meaningful name for the new column

- Expression - the expression that computes the values of the new column

- Notes - text field to describe the action

- Validate - click on validate to verify the expression

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we are using German_Credit_Dataset, which contains a numerical column ‘Age’. Using ‘Age’, we will create a new column ‘Age_Category’ that categorizes individuals as Student (age < 18), Adult (18 ≤ age ≤ 60), or Senior (age > 60).

- Open the view page of the dataset and click on Sigma icon

- Click on Add an action and choose new_column

- Provide details for a new column

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
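If the Expression field accepts SQL-style expressions (an assumption; the accepted dialect is not stated in this section), the age categories could be written as a `CASE` expression. The sketch below checks such an expression with Python's built-in `sqlite3` module on made-up ages, counting ages 18 through 60 as Adult.

```python
import sqlite3

# No ELSE branch, so a NULL Age yields a NULL category instead of 'Senior'.
AGE_CATEGORY = """
CASE
    WHEN Age < 18 THEN 'Student'
    WHEN Age <= 60 THEN 'Adult'
    WHEN Age > 60 THEN 'Senior'
END
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE german_credit (Age INTEGER)")
conn.executemany(
    "INSERT INTO german_credit VALUES (?)",
    [(None,), (17,), (18,), (45,), (60,), (61,)],
)
result = conn.execute(
    f"SELECT Age, {AGE_CATEGORY} AS Age_Category FROM german_credit ORDER BY Age"
).fetchall()
```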

Pivot

A pivot function is a data transformation tool used to reorganize data in a table from rows to columns. This function requires selecting a pivot column (categorical column), value columns (numerical column), and an aggregate function. Each distinct value of the pivot column becomes a new column in the pivoted table, and its cells hold the aggregated values.

Follow these steps to perform the pivot-

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose pivot

- Provide details-

- Pivot Column - select a categorical column for pivot

- Value column - select a numerical column

- Aggregate - select the aggregate function from the available list

- Group By - select the columns to group by; each combination of their values becomes a row in the pivoted table

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we will apply the pivot action to trend chart data. Here, we want the total volume of products in each region, grouped by company and brand.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose pivot

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
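The pivot in this example can be sketched in plain Python with the aggregate fixed to a sum. The column names (`company`, `brand`, `region`, `volume`) and values are hypothetical; the group-by columns form the rows, and each distinct region becomes a column.

```python
from collections import defaultdict


def pivot(rows, group_by, pivot_column, value_column):
    """Sum `value_column` per group, turning each pivot value into its own column."""
    sums = defaultdict(dict)  # group key -> {pivot value: running total}
    pivot_values = []         # distinct pivot values, in first-seen order
    for row in rows:
        key = tuple(row[col] for col in group_by)
        pv = row[pivot_column]
        if pv not in pivot_values:
            pivot_values.append(pv)
        sums[key][pv] = sums[key].get(pv, 0) + row[value_column]
    # Groups with no rows for a pivot value get None in that column.
    return [
        {**dict(zip(group_by, key)), **{pv: totals.get(pv) for pv in pivot_values}}
        for key, totals in sums.items()
    ]


rows = [
    {"company": "A", "brand": "X", "region": "North", "volume": 10},
    {"company": "A", "brand": "X", "region": "South", "volume": 5},
    {"company": "A", "brand": "X", "region": "North", "volume": 3},
    {"company": "B", "brand": "Y", "region": "South", "volume": 7},
]
result = pivot(rows, ["company", "brand"], "region", "volume")
```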

Split

The Split column function can be used to split string-type columns based on a regular expression.

Follow these steps to perform the split:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose split

- Provide details:

- Select column - select column for splitting

- Regex - enter the regular expression used to split the string. To split on a simple delimiter, enter just the delimiter.

- Enter column names - enter names for the columns produced by the split. For example, if the regular expression splits each value into two strings, provide two column names.

- Drop source column - to drop the source column after the operation

- Notes - add notes to describe the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we will perform a split action on autoinsurance_churn with demographic data. Its column ‘home market value’ stores a range of values as a string (for example, 2000 - 3000). We will split this on ‘-’ to get the lower and upper bounds of the range.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose split

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
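A regex split into named columns can be sketched with Python's `re.split`. The pattern `\s*-\s*` splits on a hyphen with optional surrounding spaces; the helper and the new column names `hmv_low` and `hmv_high` are hypothetical. The pieces stay strings, so converting them to numbers would be a separate datatype step.

```python
import re


def split_column(rows, column, pattern, new_columns, drop_source=False):
    """Split `column` with a regex and store the pieces in `new_columns`.

    Missing pieces become None; pieces beyond len(new_columns) are discarded.
    """
    out = []
    for row in rows:
        value = row[column]
        parts = re.split(pattern, value) if value is not None else []
        new_row = dict(row)
        if drop_source:
            del new_row[column]
        for i, name in enumerate(new_columns):
            new_row[name] = parts[i].strip() if i < len(parts) else None
        out.append(new_row)
    return out


rows = [{"home_market_value": "2000 - 3000"}, {"home_market_value": None}]
split_rows = split_column(rows, "home_market_value", r"\s*-\s*", ["hmv_low", "hmv_high"], drop_source=True)
```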

Union

Union is used to append the rows of another dataset to the dataset.

Follow these steps to perform union-

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose union

- Provide details-

- Select dataset for union - select dataset to perform union

- Notes - add notes to describe the action taken

- Allow disjoint append - check ‘Allow Disjoint Append’ to merge datasets that have different columns. Columns that are missing from one of the datasets are filled with NULL values in the union.

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

We are applying union on sales data for store 5 and appending records from sales data for store 1. This will result in a dataset with sales data for stores 1 and 5.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose union

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
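A union with the disjoint-append behaviour described above can be sketched as follows. The helper, the assumption that each dataset has uniform columns (read from its first row), and the sample rows are illustrative only.

```python
def union(left, right, allow_disjoint=False):
    """Append all rows of `right` to `left`.

    With allow_disjoint=True, datasets with different columns are merged and any
    column missing from a row is filled with None (NULL).
    """
    left_cols = list(left[0]) if left else []
    right_cols = list(right[0]) if right else []
    if not allow_disjoint and left and right and set(left_cols) != set(right_cols):
        raise ValueError("column mismatch; enable allow_disjoint to union anyway")
    columns = left_cols + [c for c in right_cols if c not in left_cols]
    return [{c: row.get(c) for c in columns} for row in left + right]


store_5 = [{"Store": 5, "Weekly_Sales": 300.0}]
store_1 = [{"Store": 1, "Weekly_Sales": 900.0, "Holiday_Flag": 0}]
combined = union(store_5, store_1, allow_disjoint=True)
```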

Unpivot

Unpivoting is the process of reversing a pivot operation on data. It takes data that's been summarized into columns and spreads it back out into rows.

Follow these steps to perform unpivot:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose unpivot

- Provide details:

- Unpivot columns - select columns to unpivot

- Name of the unpivoted column - provide the name of the new column that will hold the names of the unpivoted columns

- Values column - provide the name of the new column that will hold their values

- Drop null values - set to true to drop rows whose value is null

- Notes - enter notes for describing the action taken

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

- Materialize/ Materialize overwrite - to persist modified data

Example

For example, we will apply the unpivot action to the pivoted trend chart data.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose unpivot

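- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset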
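Unpivoting can be sketched as the reverse of a pivot: every selected column becomes a (name, value) pair on its own row, and the remaining columns are repeated as identifiers. The helper and the sample data (a small pivoted table of volumes by region) are hypothetical; the arguments mirror the form fields above.

```python
def unpivot(rows, unpivot_columns, name_column, values_column, drop_nulls=False):
    """Emit one row per (input row, unpivoted column) pair.

    Columns not listed in `unpivot_columns` are kept as identifiers on every output row.
    """
    to_unpivot = set(unpivot_columns)
    out = []
    for row in rows:
        ids = {k: v for k, v in row.items() if k not in to_unpivot}
        for col in unpivot_columns:
            value = row.get(col)
            if drop_nulls and value is None:
                continue  # "Drop null values" set to true
            out.append({**ids, name_column: col, values_column: value})
    return out


pivoted = [
    {"company": "A", "brand": "X", "North": 13.0, "South": 5.0},
    {"company": "B", "brand": "Y", "North": None, "South": 7.0},
]
long_rows = unpivot(pivoted, ["North", "South"], "region", "volume", drop_nulls=True)
```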

Upsert

Upsert is used to add rows that are not duplicates to the dataset. The difference between upsert and union is that union appends all the rows from the new dataset to the existing dataset. Upsert, on the other hand, will append only those rows that are not already there in the existing dataset.

Follow these steps to perform upsert-

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose upsert

- Provide details-

- Select dataset for upsert - select the dataset to perform an upsert

- Notes - add notes to describe the action taken

- Allow disjoint append - check ‘Allow Disjoint Append’ to merge datasets that have different columns. Columns that are missing from one of the datasets are filled with NULL values in the upsert.

- Validate - click on validate to verify the inputs

- Save - to run the action on the dataset

Example

We are applying upsert on sales data for store 5 and appending records from sales data for store 1. This will result in a dataset with sales data for stores 1 and 5.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose upsert

- Provide details

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- There is no need to materialize, because upsert writes directly to the table.
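The difference from union can be sketched as follows: only incoming rows not already present are appended. Treating a row as a duplicate only when all of its values match is an assumption based on the description above (many systems instead match on key columns and update existing rows), and disjoint append is not modelled.

```python
def upsert(existing, incoming):
    """Append only the incoming rows that are not already present in `existing`.

    Rows are compared on all their (column, value) pairs; values must be hashable.
    """
    seen = {tuple(sorted(row.items())) for row in existing}
    result = list(existing)
    for row in incoming:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


store_5 = [{"Store": 5, "Date": "2010-02-05", "Weekly_Sales": 300.0}]
incoming = [
    {"Store": 1, "Date": "2010-02-05", "Weekly_Sales": 900.0},
    {"Store": 5, "Date": "2010-02-05", "Weekly_Sales": 300.0},  # already present
]
merged = upsert(store_5, incoming)
```

A union of the same inputs would contain three rows; the upsert keeps two.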

Window

This action is used to perform statistical operations such as rank and row number on a dataset, returning a result for each row individually. It allows a user to perform calculations on a set of rows preceding or following the current row within a result set. This contrasts with regular aggregate functions, which collapse an entire group of rows into a single result. The window is defined using the OVER clause in SQL, which specifies how to partition and order the rows. Partitioning divides the data into sets, while ordering defines the sequence within each partition.

Follow these steps to apply the window function:

- Click on the enrichment function (represented as a sigma icon - Σ)

- Click on Add an action and choose window

- Provide details:

- Select columns to partition by - select column for partitioning

- Expression - write an expression with the required aggregate function

- Select columns to order by - select columns for ordering

- Define row range - define row range for the window (start, end)

- New column name - provide column name

- Notes - keep notes for details

- SQL Editor - the whole window operation can be written as an SQL statement

- Validate - click on validate to verify the expression

- Save - to run the action on the dataset

- Materialize/Materialize Overwrite - to persist modified data

Example

We will use Walmart's Weekly Sales data to demonstrate the window function. We want the running sum of weekly sales for each Holiday Flag value, ordered by Date, with the window spanning from the first row up to the current row.

- Open the view page of the dataset and click on the sigma icon - Σ

- Click on Add an action and choose window

- Provide details

- SQL Editor - alternatively, use this to write your own query.

- Click on validate - this will validate the expression on the dataset

- Click on save - save will run the action on the dataset

- Do Materialize/ Materialize Overwrite (as needed) to persist the updated dataset
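The window in this example corresponds to a `SUM(...) OVER (...)` expression. The sketch below runs it with Python's built-in `sqlite3` module, which supports window functions from SQLite 3.25 onward (recent Python builds ship a newer version). The table name, the column names `Date`, `Holiday_Flag`, and `Weekly_Sales`, and the figures are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE weekly_sales (Date TEXT, Holiday_Flag INTEGER, Weekly_Sales REAL)")
# ISO dates, so that text ordering matches chronological ordering.
conn.executemany(
    "INSERT INTO weekly_sales VALUES (?, ?, ?)",
    [
        ("2010-02-05", 0, 100.0),
        ("2010-02-12", 1, 250.0),
        ("2010-02-19", 0, 120.0),
        ("2010-02-26", 0, 90.0),
        ("2010-09-10", 1, 200.0),
    ],
)

# Partition by the flag, order by Date, and frame the window from the first row
# of each partition up to the current row, giving a running total.
query = """
SELECT Date, Holiday_Flag, Weekly_Sales,
       SUM(Weekly_Sales) OVER (
           PARTITION BY Holiday_Flag
           ORDER BY Date
           ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
       ) AS running_sales
FROM weekly_sales
ORDER BY Holiday_Flag, Date
"""
result = conn.execute(query).fetchall()
```

Unlike a GROUP BY aggregate, every input row is kept; each one gains a `running_sales` value computed over its own window.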

Common Options

The following are common options for different types of enrichment functions:

- Validate - validates provided input expression and configuration. It is recommended to run validate first, verify the provided inputs, and update if required.

- Save - runs the selected actions and saves the configuration. Saving does not create the transformed data; it only saves the logic of the applied transformations. To produce the actual data, materialize the dataset.

- Materialize - persists the data. This option is available at the bottom of the xflow-action tab, which you can open by clicking the data enrichment icon (sigma icon - Σ).
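The split between Save and Materialize resembles lazy evaluation: saving records the transformation logic, and materializing runs it and persists the output. The sketch below models a flow as an ordered list of functions; the `Flow` class and its methods are hypothetical, and the preview run that Save performs in xVector is not modelled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Row = Dict[str, object]
Step = Callable[[List[Row]], List[Row]]


@dataclass
class Flow:
    """Hypothetical enrichment flow: saved steps plus the persisted output."""

    source: List[Row]
    steps: List[Step] = field(default_factory=list)
    materialized: Optional[List[Row]] = None

    def save(self, step: Step) -> None:
        """Record a transformation step; no persisted data is produced."""
        self.steps.append(step)

    def materialize(self) -> List[Row]:
        """Apply every saved step in order and keep the result as persisted data."""
        data = list(self.source)
        for step in self.steps:
            data = step(data)
        self.materialized = data
        return data


flow = Flow(source=[{"Store": 1}, {"Store": 5}])
flow.save(lambda rows: [r for r in rows if r["Store"] == 1])
before = flow.materialized  # still None: saving stored only the logic
after = flow.materialize()
```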

Alerts

Users can set up alerts based on rules for thresholds or drifts.

Threshold

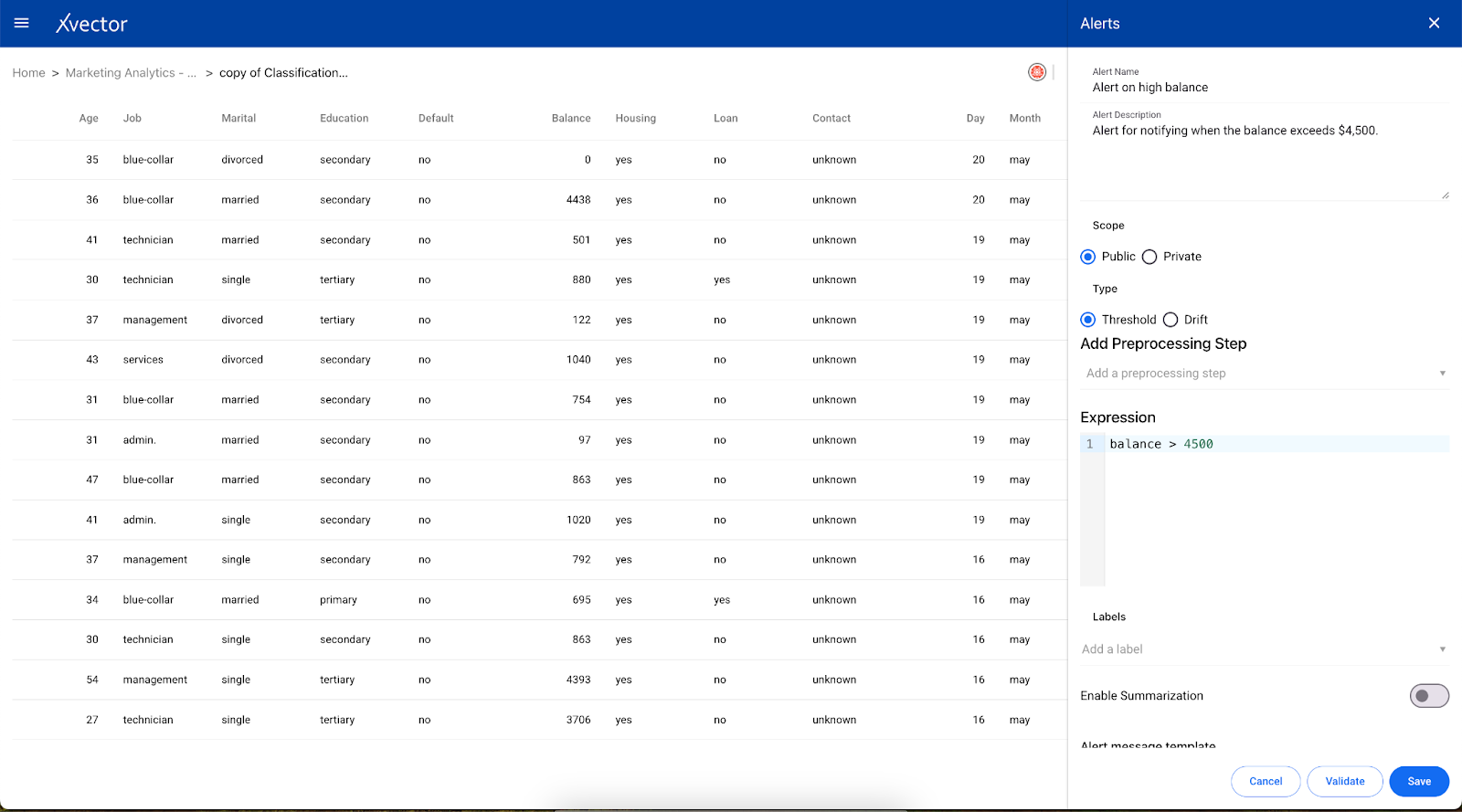

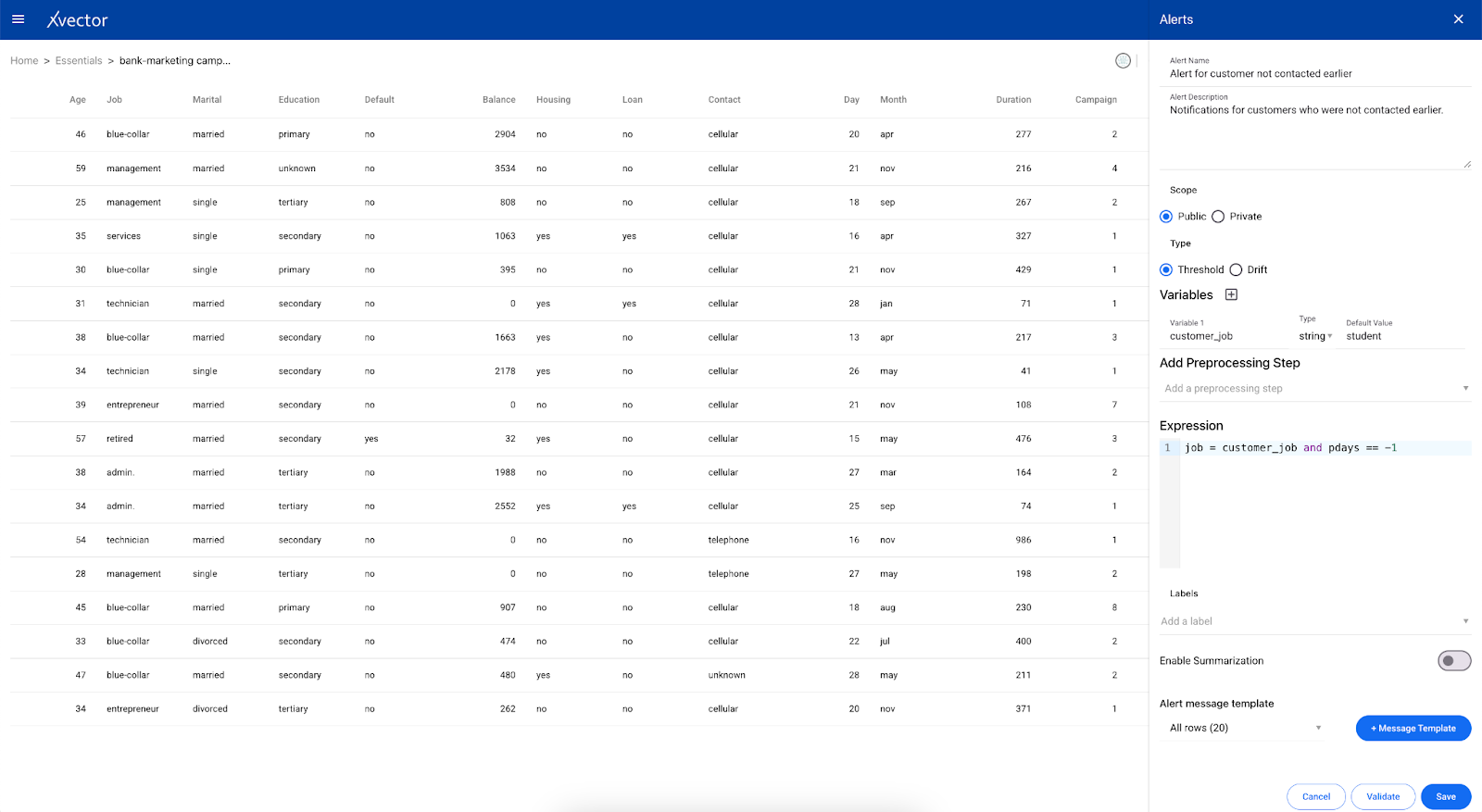

Create an alert with the following steps:

- Alert Name - provide a name for the alert

- Alert description - provide a description of the alert

- Scope - choose whether the alert is public or private

- Type - choose Threshold

- Add Preprocessing Step - add a preprocessing step for the alert. This function runs on the dataset before the alert is triggered. The available functions are the same as the enrichment functions described above; choose one of them.

- Expression - you can provide a custom expression for the alert to run

- Validate - as a best practice, validate your inputs before you save.

- Save - to run the action on the dataset.
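Conceptually, a threshold alert runs its preprocessing step and then evaluates the expression on the result. A minimal sketch, assuming a preprocessing step that sums a column and an expression that compares the total with a threshold; the function names, the column, and the threshold value are hypothetical.

```python
def total_sales(rows):
    """Hypothetical preprocessing step: aggregate Weekly_Sales into one value."""
    return sum(row["Weekly_Sales"] for row in rows)


def check_threshold_alert(rows, preprocess, condition):
    """Run the preprocessing step, then return True if the alert condition holds."""
    metric = preprocess(rows)
    return condition(metric)


rows = [{"Weekly_Sales": 400.0}, {"Weekly_Sales": 350.0}]
# Fire when total sales drop below 1000.
fired = check_threshold_alert(rows, total_sales, lambda total: total < 1000.0)
```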

Drifts

Drifts are calculated in the context of models. They are calculated when the dataset is synchronized with the Data Source. The data source should have indices for synchronization.

This is explained further in the Models section.
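The method xVector uses to compute drift is not specified in this section. As an illustration of the general idea only, the Population Stability Index (PSI) is one common drift measure: it compares the share of values falling into each bin in a reference sample and in a newer sample. A minimal sketch, with equal-width bins derived from the reference sample (a simplifying assumption):

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` against `reference`.

    Bins are equal-width over the reference range; values outside it are
    clamped into the first or last bin. `eps` keeps empty bins out of log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref, cur = shares(reference), shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = list(range(100))
shifted = [x + 50 for x in range(100)]
same_score = psi(reference, reference)  # identical distributions
drift_score = psi(reference, shifted)   # the distribution has moved
```

Every term of the sum is non-negative, so the score is zero for identical bin shares and grows as the distributions diverge.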

Models

Model Overview

Models allow users to find patterns in data and make predictions.

Regression models, a supervised learning technique, allow users to predict a value from data. For example, given inventory, advertising spending, and campaign data, a regression model can predict a lift in sales.

Classifiers identify the classes present in a dataset; for example, a classifier can predict which customers will churn based on order history and other digital footprints left by the customer.

Clustering models enable users to group/cluster based on different dataset attributes. Businesses use clustering models to understand customer behavior by finding different segments based on purchasing behavior.

Time series analysis allows businesses to forecast time-dependent variables such as sales, which helps manage finance and supply chain functions better.

Natural language processing (NLP) and large language models (LLM) can extract entities, identify relevant topics, or understand sentiment in textual data.

These algorithms are sensitive to the data, and the distribution underlying the data can change over time, which may degrade the performance of a specific algorithm. We therefore need a mechanism for selecting the best algorithm.

In xVectorlabs, building models begins with creating drivers. Drivers are the foundation: libraries such as scikit-learn and XGBoost power the algorithms. Once a driver is in place, the next step is to create a model, a framework ready for experimentation.

Each model becomes a hub of exploration, where experiments are authored to test various facets of the algorithm. Each experiment, in turn, contains multiple runs, each a distinct attempt to capture and compare the parameters that drive the algorithm's behavior. For example, you might tweak the settings of a regression model, such as adjusting its learning rate or changing its input features, and observe how these changes affect its performance.

This structure organizes models as a composition of drivers, experiments, and runs, creating a consistent flow for experimentation. It lets users adapt, learn, and refine their models precisely, uncovering insights and improving what their algorithms achieve.

Once the user picks the model that best fits the data, it can be deployed to make predictions. Models in production are continuously monitored for performance; anomalous behavior is quickly identified, and users are notified for further action.

The platform provides a comprehensive set of model drivers curated based on industry best practices. Advanced users can bring their algorithms by authoring custom drivers.

Experiments

A user can create multiple experiments under a model. An experiment includes one or more runs. Under each experiment, various parameters with available drivers can be tried on different datasets.

On updating any input parameters and triggering “re-train”, a new run under that experiment gets created.

Different runs under an experiment can be compared using selected performance metrics.
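The model, experiment, and run hierarchy can be pictured as nested records. In the sketch below the class and field names are hypothetical (not xVector's API): each re-train appends a run, and runs are compared on one chosen metric. The metric values are made up and passed in directly; in practice they would come from training.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    driver: str
    params: Dict[str, float]
    metrics: Dict[str, float]


@dataclass
class Experiment:
    name: str
    runs: List[Run] = field(default_factory=list)

    def retrain(self, driver: str, params: Dict[str, float], metrics: Dict[str, float]) -> Run:
        """Record a re-train with updated parameters as a new run."""
        run = Run(driver=driver, params=params, metrics=metrics)
        self.runs.append(run)
        return run

    def best_run(self, metric: str, lower_is_better: bool = True) -> Run:
        """Compare all runs on one performance metric and return the best."""
        def score(run: Run) -> float:
            return run.metrics[metric]
        return min(self.runs, key=score) if lower_is_better else max(self.runs, key=score)


@dataclass
class Model:
    name: str
    experiments: List[Experiment] = field(default_factory=list)


model = Model(name="sales_lift")
experiment = Experiment(name="baseline")
model.experiments.append(experiment)
experiment.retrain("gradient_boosting", {"learning_rate": 0.1}, {"rmse": 12.4})
experiment.retrain("gradient_boosting", {"learning_rate": 0.05}, {"rmse": 10.9})
best = experiment.best_run("rmse")  # lower RMSE is better
```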

Experiments Options

One can view a list of all experiments on the experiment view page that opens on viewing a model.

- Comments - users can comment on experiments.

- Timeline - one can view the action history for the model of the selected experiment. Click on the ( i ) icon to view the timeline.

- Add a new experiment - by clicking on the ( + ) icon, one can add a new experiment to the existing model.

Experiment View Page

Runs

An experiment can contain multiple runs, each with different input parameters and producing performance metrics as output. Based on these metrics, one run can be chosen for the final model.

This aims to enable a user to experiment with different model drivers, datasets, and parameters to achieve the expected performance metric for a model before deploying.

Runs Options

One can view a list of all runs on the run view page that opens on viewing an experiment.

- Comments - users can comment on experiments.

- Timeline - one can view the action history for the model of the selected experiment. Click on the ( i ) icon to view the timeline.

- Add a new run - by clicking on the ( + ) icon, one can add a new run to the existing experiment.

- View - option to view the model output report

- Drifts - displays drift report of the input data

- Build + Deploy - option to build and deploy the model run, making an endpoint available for prediction.

- Delete - option to delete the run.

- Predict - option available on the deployed model. The user can test the prediction endpoint with sample data and verify the results.

- Copy URL - copies the model's predict URL to the clipboard

- Shutdown - option to shut down the deployed model

- Token - a token for authenticating requests to the model predict API.

Example

Run view page

There are options at the end of each Run which are described below (icons from left to right):

View

Drifts

Performance

Model Drivers

Runs under experiment(s) are powered by underlying libraries and algorithms defined in model drivers. For example, a regression model can use a statistical or machine learning library such as scikit-learn. The platform provides a comprehensive set of model drivers for business analysts and advanced users. In addition, a data scientist can author custom drivers.

Authoring Drivers

Users can author custom drivers for different model types like regression, classification, clustering, time series, etc. If the requirements do not fit into any one type of model, users can choose the ‘app’ type to author their custom driver.

Creating a new driver

Follow these steps to author a new driver:

- From a workspace, click on Add and choose Models

- Choose Author Estimator from the available list

- Enter details-

- Name - Provide a meaningful name for the driver

- Type - choose from the available options

- Choose a base driver - choose a base driver if the new driver needs to be authored using some existing driver.

- Pretrained - set to true if the driver uses some pre-trained models and does not require training on a particular dataset.

- Scope - select from available options

A task will be triggered to start a Jupyter server for authoring drivers. Once resources are allocated, click on the Launch Jupyter Server button. This will open a Jupyter notebook where users can author drivers.

The notebook contains the following five files:

- train.ipynb

- predict.ipynb

- xvector_estimator_utils.py

- config.json

- requirements.txt

Users write the training algorithm in train.ipynb, following the provided format, and modify predict.ipynb as required; predict.ipynb is used to generate predictions. To define training or input parameters, open config.json in edit mode and add them in the provided format.
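The minimal Python sketch below illustrates how the editable files divide the work. It assumes a hypothetical `parameters` section in config.json. The real templates in train.ipynb and predict.ipynb define their own format, and the function names `train`, `save_model`, and `predict` are also hypothetical. Training parameters such as the learning rate live in the config, so they can change without editing code. Training writes a model artifact, and prediction only loads that artifact.

```python
import json
import os
import tempfile

# Hypothetical config.json content; the real template defines its own layout.
EXAMPLE_CONFIG = {"parameters": {"learning_rate": 0.05, "epochs": 2000}}


def train(rows, config):
    """train.ipynb counterpart: fit y = w * x + b by gradient descent.

    rows: list of (x, y) pairs; hyperparameters come from config["parameters"].
    """
    params = config["parameters"]
    lr, epochs = params["learning_rate"], params["epochs"]
    w, b = 0.0, 0.0
    n = len(rows)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in rows) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in rows) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return {"w": w, "b": b}


def save_model(model, path):
    """Persist the trained coefficients as the model artifact."""
    with open(path, "w") as f:
        json.dump(model, f)


def predict(xs, model_path):
    """predict.ipynb counterpart: load the saved artifact and score new inputs."""
    with open(model_path) as f:
        model = json.load(f)
    return [model["w"] * x + model["b"] for x in xs]


if __name__ == "__main__":
    data = [(0, 1), (1, 3), (2, 5), (3, 7)]  # toy data following y = 2x + 1
    model = train(data, EXAMPLE_CONFIG)
    path = os.path.join(tempfile.mkdtemp(), "model.json")
    save_model(model, path)
    print(predict([4, 5], path))  # close to [9.0, 11.0]
```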

Notebook options

The menu bar contains an xVector menu with the following actions:

Select dataset - select the dataset for the driver. This updates config.json with the metadata of the selected dataset.

Register - once the driver is authored (train.ipynb, predict.ipynb, and config.json have been modified correctly), register it to make the driver available for use in models. Before registering, make sure all five files are present with their original names.

Shutdown - this is to shut down the running Jupyter server.
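Register expects all five files under their original names. A quick check in a notebook cell can catch a renamed or deleted file before you register. This is a minimal local sketch, not the platform's own validation. It checks that each file exists and that config.json parses as JSON, but not that the notebooks are correct.

```python
import json
from pathlib import Path

# The five files provided by the driver template; their names must not change.
REQUIRED_FILES = (
    "train.ipynb",
    "predict.ipynb",
    "xvector_estimator_utils.py",
    "config.json",
    "requirements.txt",
)


def missing_driver_files(driver_dir="."):
    """Return the required driver files that are absent from driver_dir."""
    root = Path(driver_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


def config_is_valid_json(driver_dir="."):
    """Return True if config.json exists and parses as JSON."""
    try:
        json.loads((Path(driver_dir) / "config.json").read_text())
        return True
    except (OSError, json.JSONDecodeError):
        return False


if __name__ == "__main__":
    missing = missing_driver_files()
    if missing:
        print("Missing before Register:", ", ".join(missing))
    elif not config_is_valid_json():
        print("config.json is not valid JSON")
    else:
        print("All five driver files are present.")
```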

Below are some models that can be created in xVector.

Regression Model

Regression is a set of techniques for uncovering relationships between features (independent variables) and a continuous outcome (dependent variable). It's a supervised learning technique, meaning the algorithm learns from labeled data where the outcome is already known. The goal is to use the relationships between the features and the outcome to predict the outcome for new data.
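As a concrete illustration of fitting such a relationship, the sketch below implements ordinary least squares, one common regression technique, in plain Python by solving the normal equations. This is not necessarily what any bundled regression driver does; drivers may wrap libraries such as scikit-learn. The toy data is made up so that the true relationship, y = 3 + 2*x1 - x2, is known in advance.

```python
def fit_linear_regression(X, y):
    """Ordinary least squares: coefficients minimizing the sum of squared errors.

    X: list of feature rows (one list of numbers per record); y: continuous targets.
    Returns [intercept, coef_1, ..., coef_k].
    """
    # Prepend a constant 1 to each row so the first coefficient is the intercept.
    A = [[1.0] + [float(v) for v in row] for row in X]
    k = len(A[0])
    # Normal equations (A^T A) beta = A^T y, stored as an augmented matrix.
    M = [
        [sum(r[i] * r[j] for r in A) for j in range(k)]
        + [sum(r[i] * t for r, t in zip(A, y))]
        for i in range(k)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(M[row][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("features are linearly dependent; drop a redundant column")
        M[col], M[pivot] = M[pivot], M[col]
        for row in range(col + 1, k):
            factor = M[row][col] / M[col][col]
            for c in range(col, k + 1):
                M[row][c] -= factor * M[col][c]
    # Back substitution.
    beta = [0.0] * k
    for i in range(k - 1, -1, -1):
        beta[i] = (M[i][k] - sum(M[i][j] * beta[j] for j in range(i + 1, k))) / M[i][i]
    return beta


def predict_one(beta, row):
    """Apply fitted coefficients to one feature row."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], row))


# Toy data generated from y = 3 + 2*x1 - x2, so the fit should recover [3, 2, -1].
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 3]]
y = [3, 5, 2, 4, 4]
beta = fit_linear_regression(X, y)
print([round(b, 6) for b in beta])
```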

Follow these steps to create a regression model:

- From a workspace, click on Add and choose Models

- Choose ‘Regression’ from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select a dataset for training the model

- Choose a driver - select a driver from the available list

- Predictor Column - select the target column for prediction

- Features

- Select features - select all the columns used for training the model.

- Parameters

- Provide values for the listed training parameters.

- Advanced

- Machine type - choose a machine type from the available list. Training will run on the selected machine type.

- Requisition type - select from available options.

- Model

This will start the model creation process (allocating resources, training the model, and saving output). This results in a model with experiment(s) and run(s) under it. Users can view model runs and performance metrics once the training is complete.
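Together, the Predictor Column and Select features steps decide how each dataset row is split into model inputs and a target. The following is a minimal Python sketch of that split. The helper name and the columns `store` and `temperature` are hypothetical, and the values are illustrative. The check reflects a general modeling rule: the target column should not also be used as a feature, because that leaks the answer into training.

```python
def split_features_target(rows, feature_columns, predictor_column):
    """Split dataset rows (dicts) into a feature matrix X and a target list y."""
    # Using the target as an input would leak the answer into training.
    if predictor_column in feature_columns:
        raise ValueError(f"{predictor_column!r} cannot be both a feature and the predictor")
    X = [[row[col] for col in feature_columns] for row in rows]
    y = [row[predictor_column] for row in rows]
    return X, y


# Illustrative rows; only the column roles matter here.
rows = [
    {"store": 1, "temperature": 42.3, "weekly_sales": 24924.5},
    {"store": 1, "temperature": 38.5, "weekly_sales": 46039.5},
]
X, y = split_features_target(rows, ["store", "temperature"], "weekly_sales")
print(X, y)
```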

Example

We will train a model on Weekly Sales Data to understand the relationship between the weekly sales and other columns. This trained model can then be used to predict sales, given the values for input columns on which the model has been trained.

Click on Add and choose Models.

Choose Regression

Model Tab

Configure Tab

Features Tab

Parameters Tab

Advanced Tab

Classification Model

Classification categorizes data into predefined categories or classes based on features or attributes. This uses labeled data for training. Classification can be used for spam filtering, image recognition, fraud detection, etc.
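To make the idea of learning from labeled data concrete, the sketch below implements a nearest-centroid classifier in plain Python. Training averages the feature vectors of each class, and prediction picks the class whose average is closest. This is one of the simplest classification techniques, chosen for illustration, and it does not describe any particular xVector driver. The two features (years as a customer, claims filed last year) and the churn labels are made-up toy values.

```python
import math


def fit_centroids(X, labels):
    """Training: average the feature vectors that share each class label."""
    sums, counts = {}, {}
    for row, label in zip(X, labels):
        if label not in sums:
            sums[label] = [0.0] * len(row)
            counts[label] = 0
        for i, value in enumerate(row):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(centroids, row):
    """Prediction: return the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(row, centroids[label]))


# Toy data: [years as a customer, claims filed last year] -> churn label.
X = [[1, 3], [2, 4], [8, 0], [9, 1]]
labels = ["churn", "churn", "stay", "stay"]
centroids = fit_centroids(X, labels)
print(classify(centroids, [2, 3]))  # churn
```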

Follow these steps to create a classification model:

- From a workspace, click on Add and choose Models

- Choose Classification from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select the dataset for training the model

- Choose a driver - select a driver from the available list

- Predictor Column - select the target column for prediction. This should be the column containing the label or class values to be predicted.

- Features

- Select features - select all the columns that will be used for training the model.

- Parameters

- Provide values for the listed training parameters.

- Advanced

- Machine type - choose a machine type from the available list. Training will run on the selected machine type.

- Requisition type - select from available options.

- Model

This will start the model creation process (allocating resources, training the model, and saving output). This results in a model with experiment(s) and run(s) under it. One can view model runs and performance metrics once the training is complete.

Example

For example, we will train a classification model on the auto insurance churn dataset to predict whether a given customer will churn based on their details.

Click on Add and choose Models

Choose Classification

Model details

Configure details

Features Details

Parameters details

Advanced details

Clustering Model

Clustering is an unsupervised learning technique that uses unlabeled data. The goal of clustering is to identify groups (or clusters) within the data such that data points in the same group are similar to one another and dissimilar to data points in other groups. It can be used for customer segmentation in marketing, anomaly detection in fraud analysis, or image compression.
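k-means is one widely used clustering algorithm. The plain-Python sketch below shows the idea: assign each point to its nearest centroid, move each centroid to the mean of its assigned points, and repeat until no assignment changes. The drivers available in xVector may use other algorithms or library implementations. The two-dimensional points are toy values standing in for hypothetical customer features such as order frequency and average spend.

```python
import math
import random


def kmeans(points, k, max_iterations=100, seed=0):
    """Cluster points (sequences of numbers) into k groups with Lloyd's algorithm.

    Returns (assignment, centroids), where assignment[i] is the cluster of points[i].
    """
    rng = random.Random(seed)
    # Initialize centroids with k points sampled without replacement.
    centroids = [list(p) for p in rng.sample(points, k)]
    assignment = None
    for _ in range(max_iterations):
        # Assignment step: each point joins the cluster with the nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment, centroids


points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
labels, centers = kmeans(points, k=2)
print(labels)
```

The cluster numbers themselves are arbitrary. What matters is which points share a number.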

Follow these steps to create a clustering model:

- From a workspace, click on Add and choose Models

- Choose Clustering from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select the dataset for training the model

- Choose a driver - select a driver from the available list

- Predictor Column - optional; select it if ground-truth labels are available.

- Features

- Select features - select all the columns that will be used for training the model.

- Parameters

- Provide values for the listed training parameters.

- Advanced

- Machine type - choose a machine type from the available list. Training will run on the selected machine type.

- Requisition type - select from available options.

- Model

This will start the model creation process (allocating resources, training the model, and saving output). This results in a model with experiment(s) and run(s) under it. One can view model runs and their performance metrics once the training is complete.

Example

For example, we will train a clustering model on the online retail store dataset to identify and understand customer segments based on purchasing behaviors to improve customer retention and maximize revenue.

Click on Add and choose Models.

Choose Clustering

Model details

Configure details

Features details

Parameters details

Advanced details

Time Series Model

Time series analysis is a technique used to analyze data points collected over time. It's specifically designed to understand how things change over time. The core objective of time series analysis is to identify patterns within the data, such as trends (upward or downward movements), seasonality (recurring fluctuations based on time of year, day, etc.), and cycles (long-term, repeating patterns). By understanding these historical patterns, time series analysis can be used to forecast future values. This is helpful in various applications like predicting future sales, stock prices, or energy consumption.
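A very simple forecasting method that uses the seasonality described above is the seasonal naive forecast, which predicts that each future period equals the value observed one full season earlier. It is commonly used as a baseline for comparing more sophisticated models, and it ignores trend. The Python sketch below is an illustration, not the method of any particular xVector driver. It takes (date, value) pairs, like those supplied by the Date and Forecast columns, and sorts them by date before forecasting.

```python
from datetime import date, timedelta


def seasonal_naive_forecast(observations, season_length, horizon):
    """Forecast `horizon` periods ahead by repeating the most recent full season.

    observations: (date, value) pairs, one per period, in any order.
    season_length: periods per cycle, e.g. 52 for weekly data with a yearly cycle.
    """
    values = [value for _, value in sorted(observations, key=lambda pair: pair[0])]
    if len(values) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = values[-season_length:]
    # Step h (0-based) falls at the same position in the cycle as last_season[h % season_length].
    return [last_season[h % season_length] for h in range(horizon)]


# Toy example: eight weekly observations with a 4-week cycle.
start = date(2024, 1, 1)
history = [(start + timedelta(weeks=i), v) for i, v in enumerate([10, 20, 30, 40, 12, 22, 32, 42])]
print(seasonal_naive_forecast(history, season_length=4, horizon=6))  # [12, 22, 32, 42, 12, 22]
```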

Follow these steps to create a time series model:

- From a workspace, click on Add and choose Models

- Choose Timeseries Analysis from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select the dataset for training the model

- Choose a driver - select a driver from the available list

- Date column - select the date column in the dataset

- Forecast Column - select the column whose values will be forecast.

- Parameters

- Provide values for the listed training parameters.

- Advanced

- Machine type - choose a machine type from the available list. Training will run on the selected machine type.

- Requisition type - select from available options.

- Model

This will start the model creation process (allocating resources, training the model, and saving output). This results in a model with experiment(s) and run(s) under it. One can view model runs and performance metrics once the training is complete.

Example

For example, we will train a time series model using Weekly Sales Data for Store_1. This takes the date column as input and forecasts weekly_sales.

Click on Add and choose Models.

Choose Timeseries

Model details

Configure details

Parameters details

Advanced details

Sentiment Analysis

Sentiment Analysis is the process of computationally identifying and classifying the emotional tone of a piece of text. It's a subfield of natural language processing (NLP) used to understand the attitude, opinion, or general feeling expressed in a text.
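To give a sense of what a sentiment driver outputs, the Python sketch below scores text with a tiny hand-written word list, which is a lexicon-based approach. It also flips a word's polarity when the word follows a negation such as "not". This is only a toy illustration with a made-up lexicon. Pre-trained sentiment drivers, like the one in the example below, typically use trained language models instead.

```python
import re

# Made-up lexicon for illustration: word -> polarity.
LEXICON = {
    "love": 1, "great": 1, "comfortable": 1, "perfect": 1,
    "poor": -1, "cheap": -1, "itchy": -1, "disappointed": -1,
}
NEGATIONS = {"not", "never", "no"}


def sentiment(text):
    """Classify text as positive, negative, or neutral by summing word polarities."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        # Flip polarity when the previous word is a negation, e.g. "not great".
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("Love this dress, so comfortable!"))  # positive
print(sentiment("The fabric is not great."))  # negative
```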

Follow these steps to create a sentiment analysis model:

- From a workspace, click on Add and choose Models

- Choose Sentiment Analysis from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select the dataset for training the model

- Choose a driver - select a driver from the available list

- Text Data Column - select the column containing the text to analyze

- Features

- Select features - this step can be skipped for sentiment analysis drivers.

- Parameters - provide values for the listed training parameters.

- Advanced

- Machine type - choose a machine type from the available list. Training will run on the selected machine type.

- Requisition type - select from available options.

- Model

This will start the model creation process (allocating resources, training the model [if the driver is not pre-trained], and saving output). This results in a model with experiment(s) and run(s) under it. One can view model runs and performance metrics once the training is complete.

Example

We will create a model to determine the sentiment of reviews in the Clothing E-Commerce Reviews dataset. For this, we use a pre-trained driver.

Click on Add and choose Models

Choose Sentiment Analysis

Model details

Configure details

Features Details

This step is skipped for sentiment analysis

Parameters Details

Advanced details

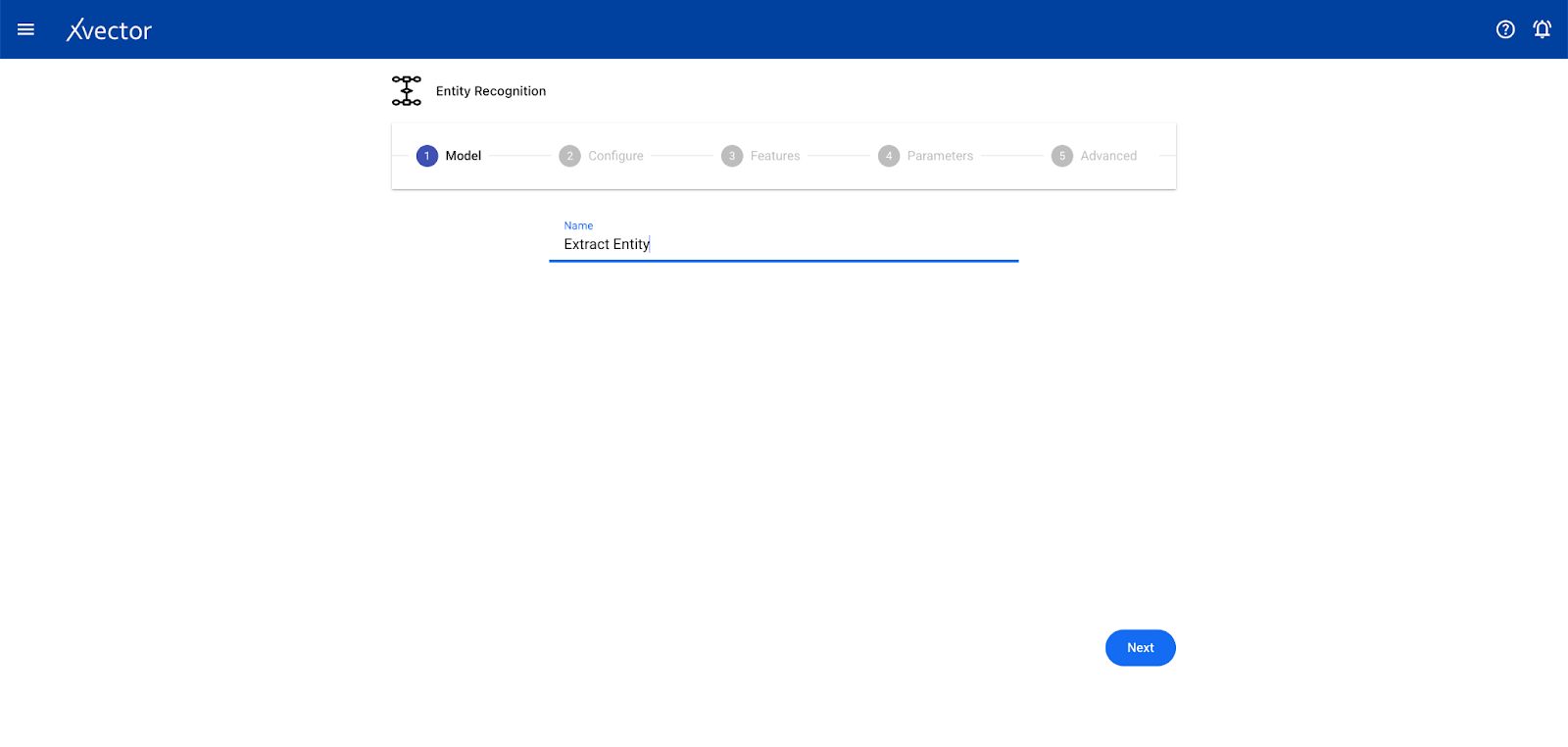

Entity Recognition

Entity Recognition, also known as Named Entity Recognition (NER), is a subtask of Natural Language Processing (NLP) that identifies and classifies key elements in text data. It helps extract key pieces of information, such as the names of people, places, and organizations. NER automates this by locating these entities and assigning each one to a predefined category.
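The simplest way to see what entity recognition produces is a dictionary (gazetteer) lookup: known phrases are matched in the text and tagged with a category, as in the Python sketch below. Real NER drivers generally use trained statistical or neural models, which can recognize entities they have never seen. The gazetteer entries and category labels here are hypothetical, and overlapping matches are not resolved.

```python
import re

# Hypothetical gazetteer: lowercase phrase -> entity category.
GAZETTEER = {
    "jane doe": "PERSON",
    "acme corp": "ORGANIZATION",
    "new york": "LOCATION",
    "paris": "LOCATION",
}


def find_entities(text):
    """Return (matched text, category, start offset) tuples in order of appearance."""
    lowered = text.lower()  # case-insensitive matching; offsets stay aligned for ASCII text
    entities = []
    for phrase, category in GAZETTEER.items():
        # \b anchors prevent matches inside longer words (e.g. "paris" in "parisian").
        for match in re.finditer(r"\b" + re.escape(phrase) + r"\b", lowered):
            entities.append((text[match.start():match.end()], category, match.start()))
    return sorted(entities, key=lambda entity: entity[2])


print(find_entities("Jane Doe joined Acme Corp in New York."))
# [('Jane Doe', 'PERSON', 0), ('Acme Corp', 'ORGANIZATION', 16), ('New York', 'LOCATION', 29)]
```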

Follow these steps to create an entity recognition model:

- From a workspace, click on Add and choose Models

- Choose Entity Recognition from the available options

- Complete the following steps:

- Model

- Name - provide a name for the model

- Configure

- Experiment Name - enter the name for the experiment under that model

- Workspace - select workspace from the available list

- Select dataset - select the dataset for training the model

- Choose a driver - select a driver from the available list